How to organise code in the large scale

John R Spray

Last update: 2023-10-25

This online book is a work in progress. Feedback is welcome.

Please leave a comment in the comments section at the end of this book.

Or e-mail johnspray274<at>gmail<dot>com

Summary

Intuitively, good quality software would allow you to read and understand any one part of the code without also having to read and understand any other part. I call this zero coupling. We are taught the meme loose coupling and high cohesion. Unfortunately, this implies that zero coupling is not achievable. It is said that a zero coupled system would not do anything. But this is only because we confuse design-time coupling with run-time communications. It is entirely possible for parts of a system to have zero knowledge about one another at design-time and still communicate at run-time. We define the word coupling to mean design-time coupling throughout this book. That’s the coupling that matters. By design-time, we mean any time you are reading, writing or understanding code. While understanding code in one module, how much do you have to know about code inside other modules?

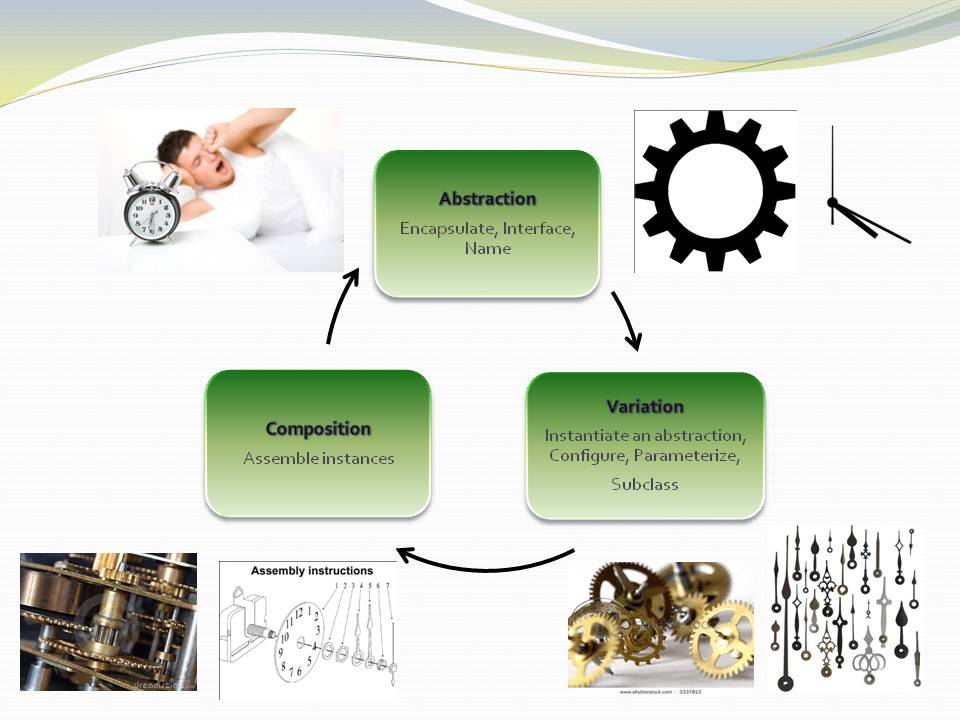

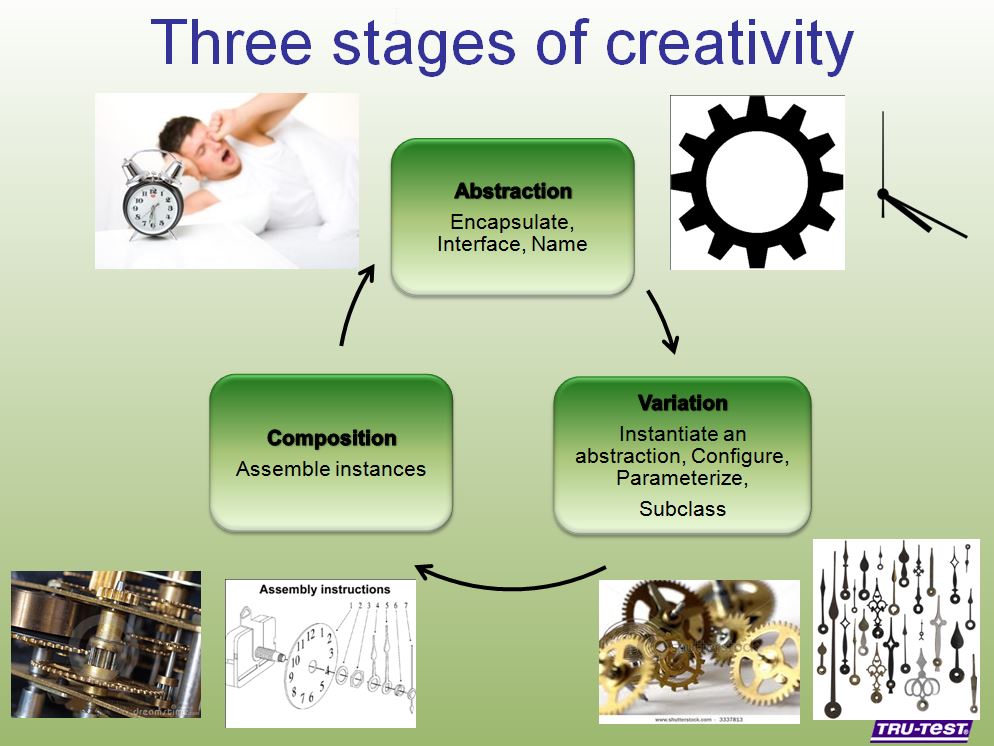

We hear the terms compile-time and run-time often, but it is design-time where complexity happens. Modules in conventional code tend to have a lot of coupling in the form of design-time collaboration with one another that causes that complexity. ALA converts that collaborative coupling into cohesion contained in a new module. Here’s how:

At its core, ALA is a set of three architectural constraints:

- The only unit of code is an abstraction.
- The only relationship allowed is a dependency on an abstraction that is significantly more abstract.
- All abstractions must be small - as a guide, less than about 500 LOC.

Many patterns and properties emerge from these three constraints. These are explored throughout this book. But first let’s clarify the three constraints.

The only unit of code is an abstraction

Abstractions are design-time modules. An abstraction is more than a module or encapsulation, which are compile-time concepts. Abstractions are what our brains use, and since we are talking about design-time, that’s what we need to call the primary artefact of composition in our software.

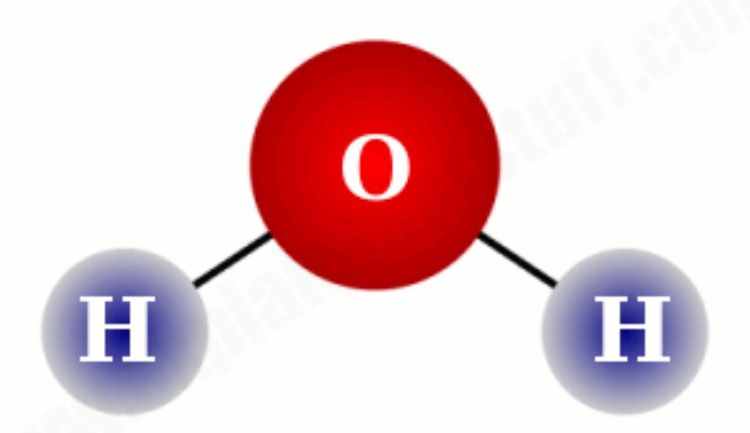

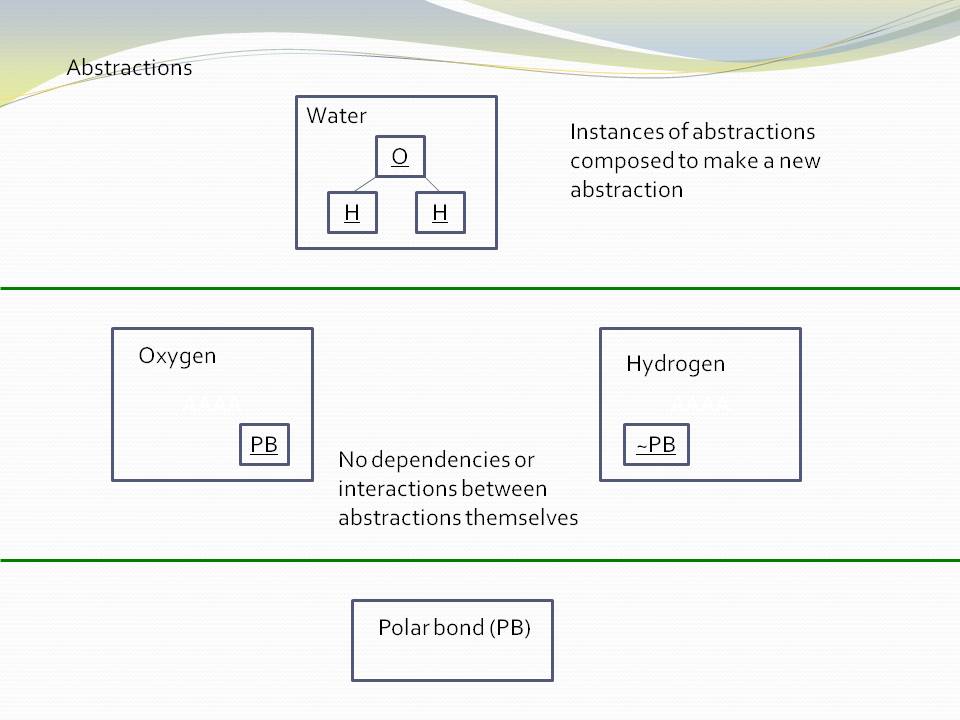

An abstraction is a learnable idea or concept. Once learned, it clicks into place like a light coming on, and it becomes an embedded part of your language. That then allows you to compose more specific abstractions using instances of it.

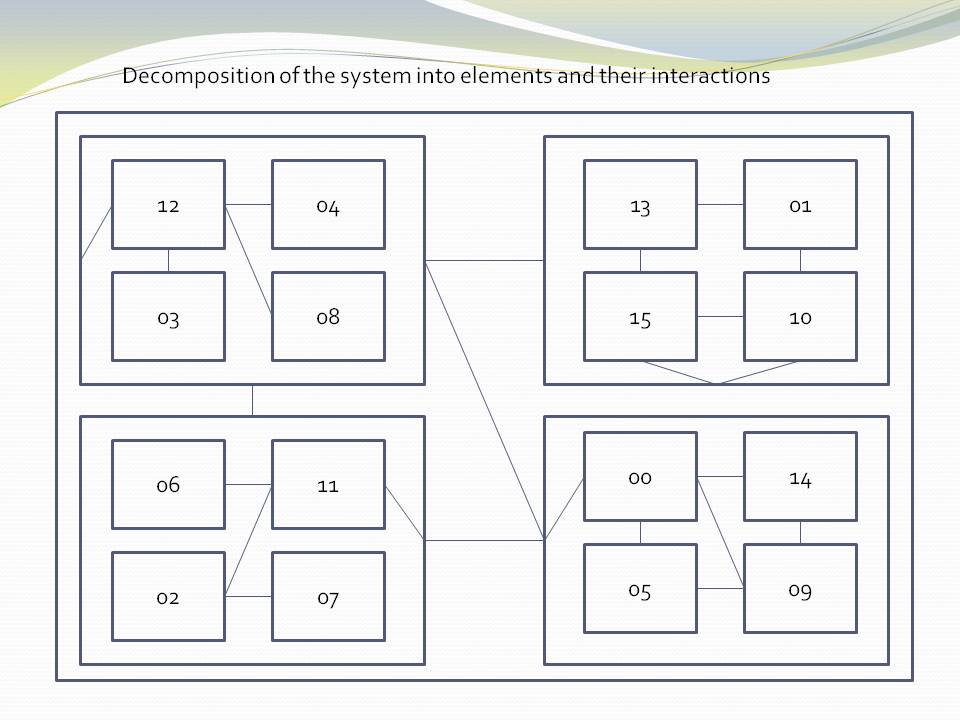

In conventional software development methods, a system is broken into smaller pieces that we call modules, classes, or components. These modules, classes or components tend to have a fixed arrangement with one another (although an individual piece may be substitutable). This fixed arrangement leads to implicit collaboration with one another to form the system. They are like jigsaw pieces. Specific pieces fit together in a specific way to make a specific picture. The system is not explicit in itself; it is just the result of collaboration of all the individual parts. A jigsaw picture is not explicit; it’s just formed by the collaboration of all the parts.

In contrast to jigsaw pieces, abstractions are like Lego pieces. These pieces are general. Given a set of instances of Lego pieces, you cannot determine a specific arrangement like you can with a jigsaw puzzle. They can be composed in an infinite variety of ways. Any specific arrangement is an explicit artefact in itself, a new abstraction. The parts in this specific arrangement are not collaborating. What makes the system is the particular composition of them. It can be varied without changing the abstractions it is composed of. The specific arrangement is an abstraction in itself, just a more specific one than the Lego pieces it is composed of. That’s going to be how we build software using ALA.

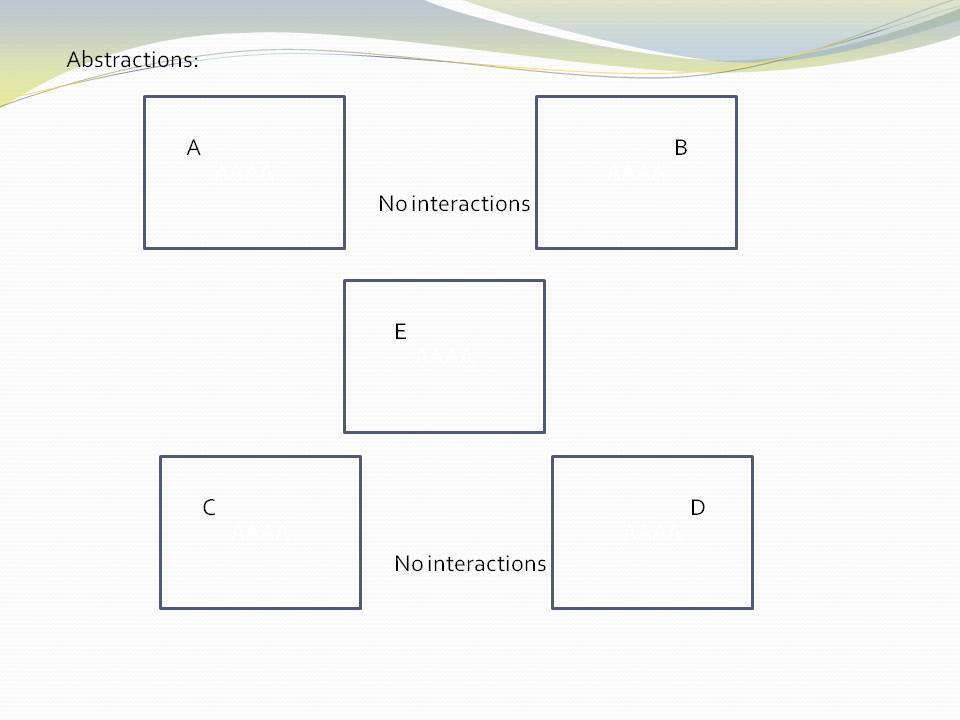

Abstractions have no knowledge of one another, nor the system that uses them.

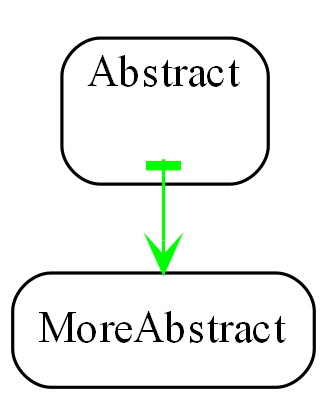

All implicit collaboration between modules in conventional architecture becomes explicit code inside a new abstraction in a higher layer in ALA.

This first constraint means that the ALA equivalent of a module is an abstraction. The distinction is crucial. Modules that are not good abstractions only provide encapsulation. The term encapsulation means hiding details at compile-time, but not necessarily at design-time. Abstractions are what our brains use, so only abstractions hide details at design-time.

Abstractions are the only mechanism that provides design-time information hiding. When David Parnas coined the term information hiding he meant at design-time. Unfortunately there is a popular meme that information hiding means encapsulation, which only hides at compile-time. The same idea of design-time information hiding has other names such as Alistair Cockburn’s protected variations, and Robert Martin’s version of the OCP (open closed principle).

In the absence of direct computer language support for abstractions, ALA generally implements abstractions as source files (like modules in C). The file usually contains one class, but may contain a small number of classes, interfaces, enums, delegates, typedefs, functions, etc.

Inside an abstraction (source file), there are no relationship rules, or any organisational rules for that matter. It is all considered cohesive code. So internally, abstractions are small balls of mud.

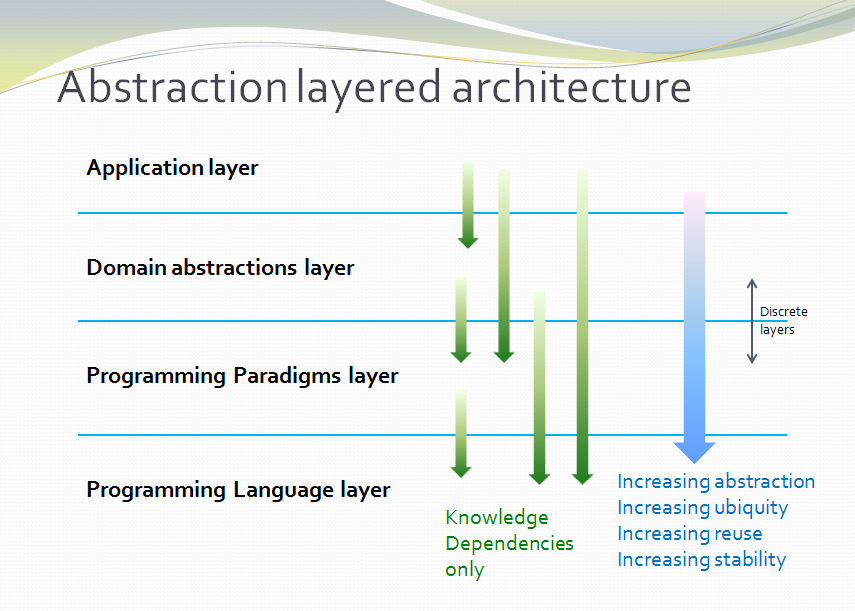

Charles Krueger pointed out that abstraction and reuse are two sides of the same coin. More abstract means more reusable. This makes sense since an abstraction means "commonality drawn from many instances".

More abstract also tends to mean more stable because a more abstract idea is drawn from more instances, and is therefore a more ubiquitous, more fundamental idea. The abstraction 'squareroot' has been stable for thousands of years despite the fact that new instances of its use occur every day.

Software libraries tend to be good abstractions because they were written without knowledge of what will use them. If we conceive of and write abstractions in our applications similarly, without knowledge of the code that will use them, we will also get good abstractions. However, we also need to build useful abstractions. This will require some knowledge of what sorts of applications we are wanting to build. We call that the domain, and the abstractions are called domain specific abstractions.

The only legal relationship is a dependency on a more abstract abstraction

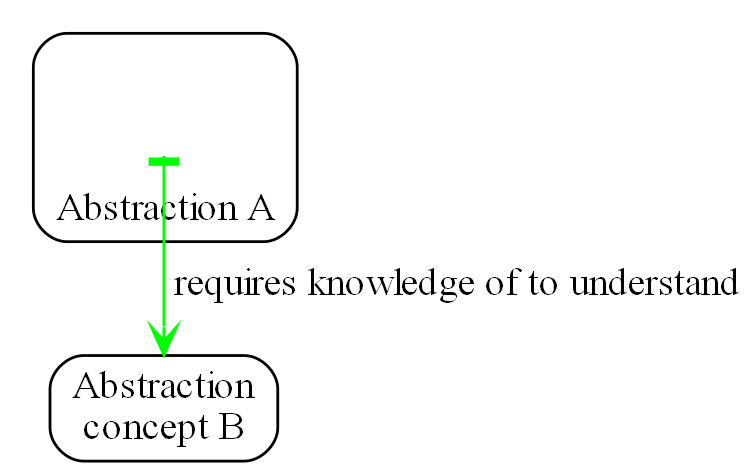

The second constraint is that dependencies must be on abstractions that are significantly more abstract (than the abstraction whose implementation has the dependency).

The word _dependency_ has many meanings in the software engineering literature. See https://www.pathsensitive.com/2022/09/bet-you-cant-solve-these-9-dependency.html for a discussion of the meanings of the word. In this book we use the word _dependency_ in two senses: design-time (one abstraction depends on another at design-time, which is why we call it a knowledge dependency) and compile-time (one class or function, etc., depends on another in order to compile).

When, in ALA, we wire two instances of abstractions, the two abstractions themselves will have no dependency on one another (because abstractions are design-time entities). The classes or functions that implement those abstractions will also have no dependency on one another (because classes/functions are compile-time entities). But the objects or executing functions will still have a dependency on one another at run-time (via an indirection of some kind). This is a run-time dependency between instances or objects or executing functions. When we use the word dependency throughout this book, we are not referring to these types of _run-time_ dependencies. We are referring to all _design-time_ and _compile-time_ dependencies.

In the linked article, _design-time_ dependencies (knowledge dependencies) are referred to as "Program logic / Proof semantics". _Compile-time_ dependencies are referred to as "Static Semantics". And _Run-time_ dependencies are referred to as "Dynamic semantics".

When we use a more abstract abstraction, for example, when a function that calculates standard deviation uses a function that calculates squareroot, the dependency at design-time is only on the concept of squareroot. As a concept, squareroot is stable and learnable. A dependency on a more abstract abstraction is a dependency on a concept that is relatively stable. Because of this stability, there is zero coupling between the code that implements standard deviation and the code that implements squareroot.
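As a minimal sketch of this kind of dependency, the C# code below computes a population standard deviation using the library function Math.Sqrt. The class name Statistics and the method signature are assumptions made for illustration only. To read the method you need the concept of a square root, but nothing about how Math.Sqrt is implemented.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class Statistics
{
    // Population standard deviation of a non-empty collection.
    // The only outside knowledge needed to read this code is the
    // concept of a square root, not the code that implements it.
    public static double StandardDeviation(IReadOnlyCollection<double> values)
    {
        if (values.Count == 0)
            throw new ArgumentException("At least one value is required.", nameof(values));

        double mean = values.Average();
        double variance = values.Sum(v => (v - mean) * (v - mean)) / values.Count;
        return Math.Sqrt(variance);
    }
}

public static class Program
{
    public static void Main()
    {
        double result = Statistics.StandardDeviation(
            new[] { 2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0 });
        Console.WriteLine(result); // prints 2
    }
}
```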

For this to work, the abstraction being used must be significantly more abstract than the one whose implementation code uses it.

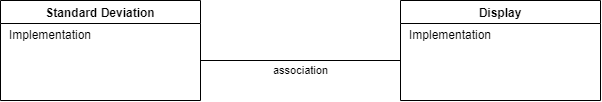

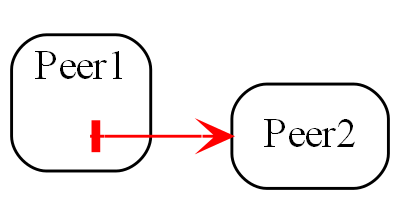

Communication between instances of peer abstractions

Seemingly, communication is needed between peer abstractions to make a system work. However, in ALA, a dependency from one abstraction on a peer abstraction is illegal. Even a dependency on the interface of a peer abstraction is illegal. For example, if the code implementing standard deviation were to, say, output the result directly to a peer module like a display, it would destroy the standard deviation abstraction. Even if the dependency is on the interface of the display abstraction, the code in standard deviation would still be coupled to a concept that is not more abstract. Standard deviation would no longer be an abstraction. It would no longer be easily learnable as a concept. Any code that used it would not be readable in itself. Standard deviation would no longer be reusable without dragging display with it.

Furthermore, over time, the fixed arrangement between the standard deviation function and the display function is likely to lead to the display function providing specifically what the standard deviation function needs, and vice versa. So both functions would tend toward being specific parts of a specific system. They would just be collaborating modules, and not abstractions.

In ALA, abstractions do not communicate. Their instances do. The instances only communicate at run-time but for that to happen something else needs to instantiate them and connect them.

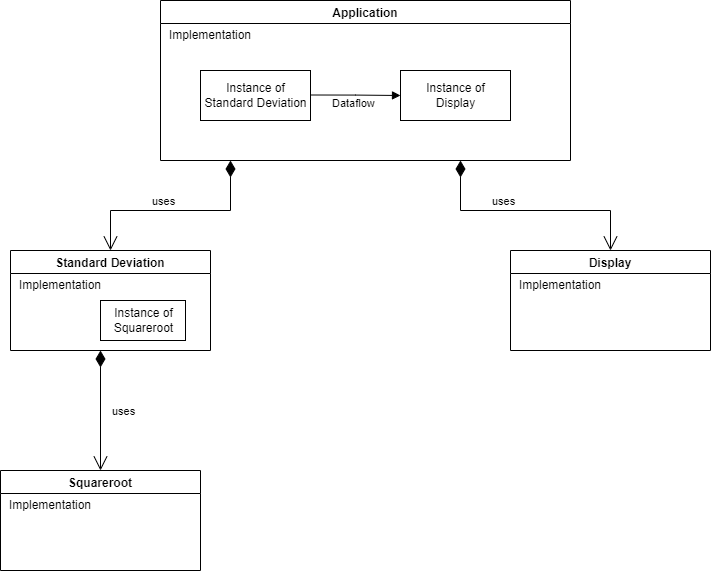

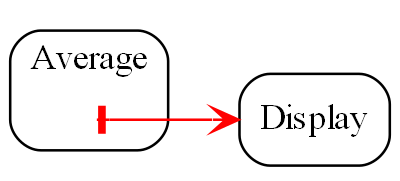

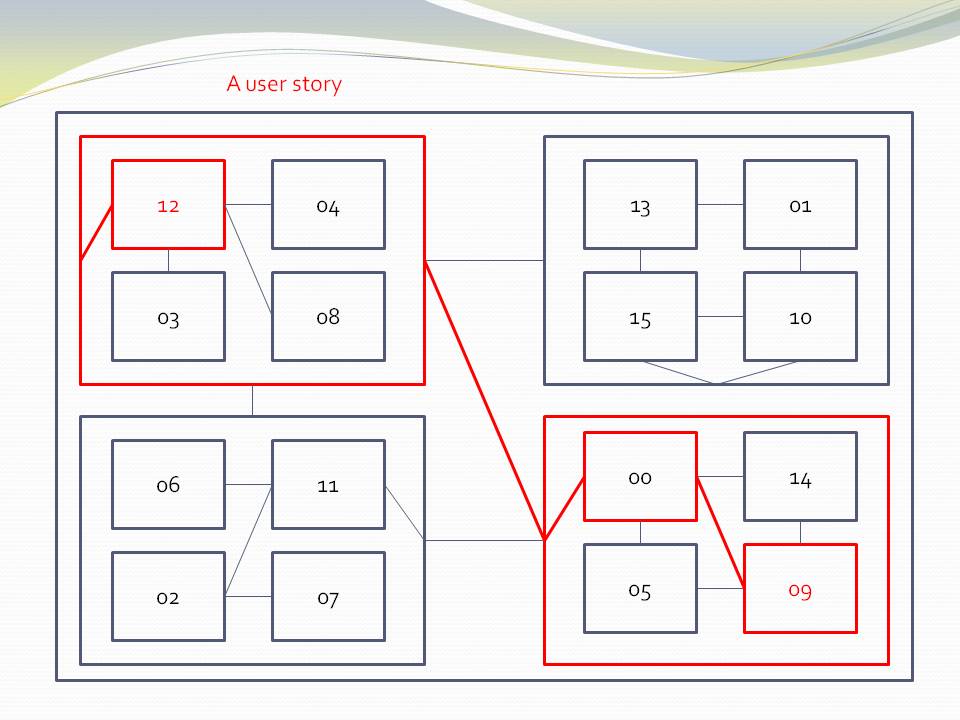

In ALA, there must be a more specific abstraction in a higher layer to represent the system consisting of an instance of a standard deviation abstraction connected to an instance of a display abstraction.

In this example, the higher layer abstraction called Application could just call the standard deviation function and then call the display function. But more commonly in ALA it creates an instance of the standard deviation abstraction and an instance of the display abstraction, and then arranges for these instances to communicate at run-time. The higher layer abstraction can be thought of as representing the user story consisting of an instance of standard deviation connected to an instance of a display.

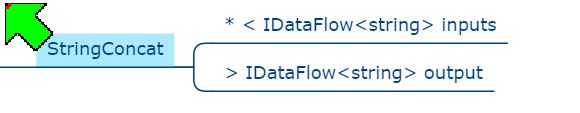

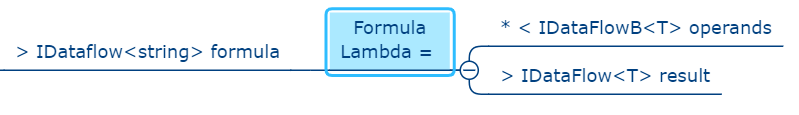

Classes with ports

In ALA we won’t be restricting ourselves to pure functions. We will use classes as well. That’s because in ALA, when we need state, we consider abstraction to be more important than referential transparency (invariant behaviour over time). Essentially in ALA we are reducing complexity (increasing analysability) by the use of zero-coupled abstractions, whereas in pure functional programming you are trying to reduce complexity only by removing time from the analysis, at least in the functions. Sometimes separating state from functions breaks otherwise good abstractions. An example would be if our standard deviation abstraction receives a stream of data and produces a running standard deviation result. In ALA, such an abstraction has its state (SumX, SumXX and N) stored with it, not passed into it. In other words, if state together with some methods makes a good abstraction, then we don’t break it. ALA is therefore highly object oriented. It is object oriented programming as it should have been.
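A running standard deviation of this kind might look like the sketch below. The state names follow the SumX, SumXX and N mentioned above; the class name RunningStandardDeviation and the Push method are assumptions for illustration. The sum-of-squares formula is used because it matches the state described, although it can lose precision on long or large-valued streams.

```csharp
using System;

// A stateful abstraction: the running totals are stored with it,
// not passed into it on every call.
public class RunningStandardDeviation
{
    private double sumX;   // sum of all values seen so far
    private double sumXX;  // sum of the squares of all values seen so far
    private long n;        // number of values seen so far

    // Accepts the next value from the stream and returns the updated
    // population standard deviation of everything seen so far.
    public double Push(double x)
    {
        sumX += x;
        sumXX += x * x;
        n++;

        double mean = sumX / n;
        double variance = sumXX / n - mean * mean;
        // Rounding can make the variance very slightly negative, so clamp it.
        return Math.Sqrt(Math.Max(variance, 0.0));
    }
}

public static class Program
{
    public static void Main()
    {
        var stdDev = new RunningStandardDeviation();
        double latest = 0.0;
        foreach (double x in new[] { 2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0 })
            latest = stdDev.Push(x);
        Console.WriteLine(latest); // prints 2
    }
}
```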

Another example of the need for a class rather than a function might be our display abstraction. A display probably needs some configuration, for example to tell it how many decimal places to display. That should be a separate interface because it’s going to be used by a different abstraction instance, and at a different time (interface segregation principle). That kind of configuration is usually set once when the abstraction is instantiated, so the configuration state should be stored inside the display abstraction.

ALA is object oriented, but it’s object oriented in a much more disciplined way than conventional object orientation. That’s because peer classes may NOT have associations. In other words, an object’s communication with another object at run-time may not be implemented by one class having knowledge of another at design-time. Not even on an interface of a peer class.

There must be a more specific abstraction in a higher layer that instantiates the two classes and arranges for them to communicate. This is more than dependency injection. Dependency injection, where one class knows about the interface belonging to another peer class, or a set of substitutable peer classes, is illegal in ALA.

Now we could have the more specific abstraction in the higher layer handle the communication itself by simply calling methods on each of the classes. For the example above, the user story abstraction in the higher layer could call a standard deviation method to get the result, and then call a display method to display it. But that would mean the user story abstraction would be handling the data itself, and furthermore would be implementing the execution model. We don’t particularly like that. We prefer the user story abstraction’s job is just to compose the instances of the two peer abstractions, in a declarative style.

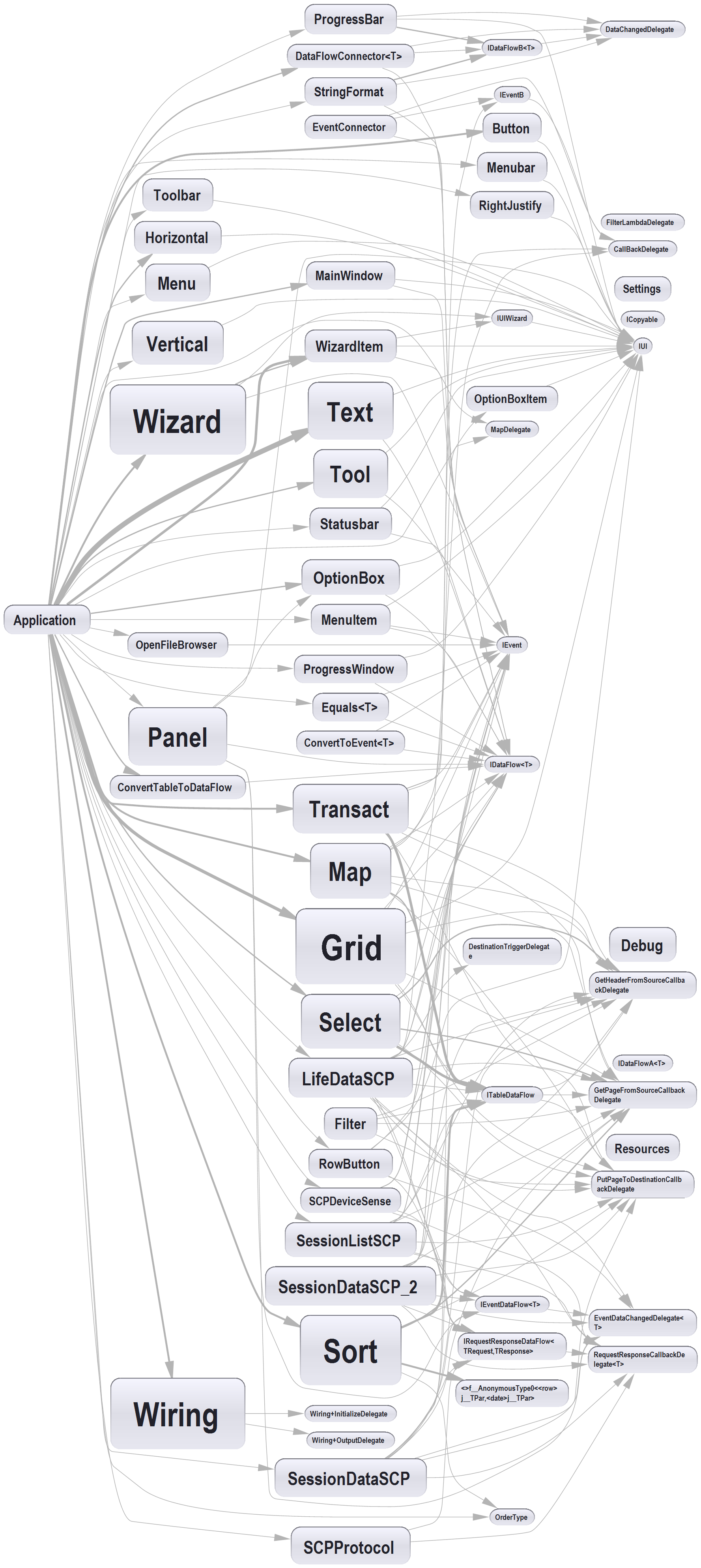

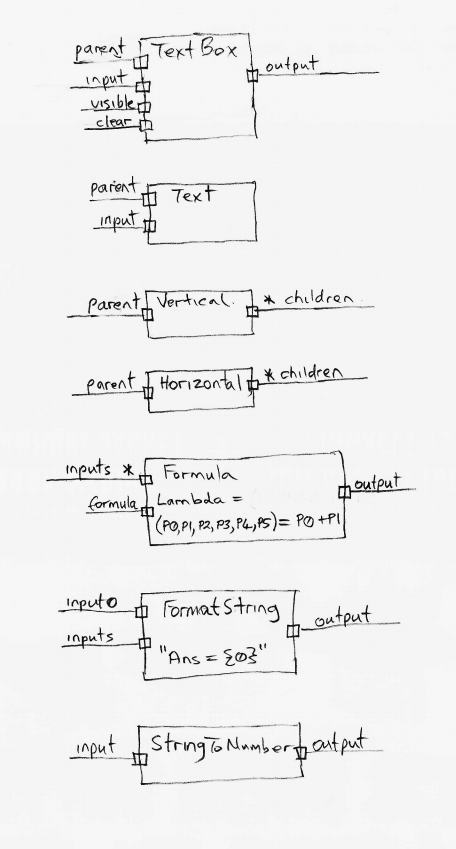

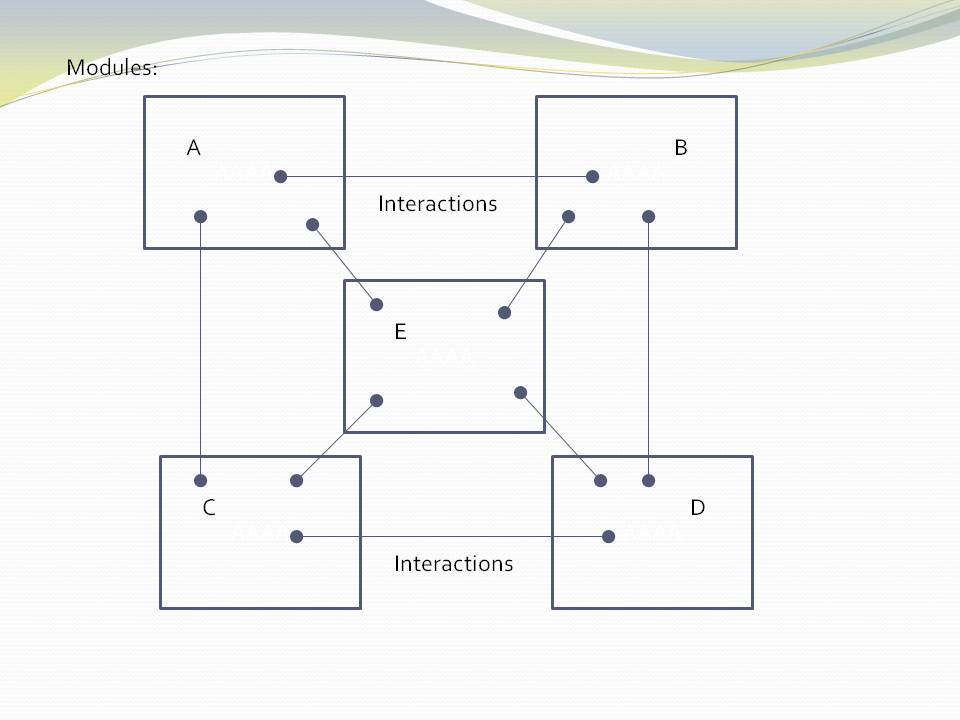

To do this, we build the classes with ports. Syntactically, a port is either a field in a class of the type of an interface, or just implementing an interface, which we already do a lot in conventional code. What makes it a port is the abstraction level of the interface. It has to be fundamentally more abstract to meet ALA’s second constraint. It needs to be a compositional idea. A compositional abstraction is usable by many classes. Ports therefore allow instances of classes to be composed in an infinite variety of ways.

We then simply wire the instances together by their ports. For example, we could make a standard deviation class with an output Dataflow port and a display class with an input Dataflow port. Instances of them can then be wired together by the user story abstraction in a higher layer.

It is important that ports use a significantly more abstract interface that comes from an even lower layer. In ALA, we call these more abstract interfaces programming paradigms. For example, the Dataflow programming paradigm can be used anywhere we need to pipe data into or out of an abstraction. That’s a pretty abstract and reusable idea.

So now, while the standard deviation and display abstractions know absolutely nothing about each other, they both know about the dataflow programming paradigm, and so their instances can be wired to communicate directly at run-time.
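The arrangement described above can be sketched as follows. The interface name IDataFlow, its Push method, the Output field and the Display constructor are all assumptions made for this illustration, not a definitive implementation. Neither domain class mentions the other; only the Application class knows both.

```csharp
using System;
using System.Globalization;

// Programming paradigms layer: a hypothetical dataflow interface.
public interface IDataFlow<T>
{
    void Push(T data);
}

// Domain abstractions layer: knows IDataFlow, knows nothing about Display.
public class StandardDeviation : IDataFlow<double>   // input port: implements the interface
{
    public IDataFlow<double> Output;                  // output port: a field of the interface type
    private double sumX, sumXX;
    private long n;

    public void Push(double x)
    {
        sumX += x;
        sumXX += x * x;
        n++;
        double mean = sumX / n;
        double variance = Math.Max(sumXX / n - mean * mean, 0.0);
        Output?.Push(Math.Sqrt(variance));            // send the running result to whatever is wired
    }
}

// Domain abstractions layer: knows IDataFlow, knows nothing about StandardDeviation.
public class Display : IDataFlow<double>              // input port
{
    private readonly string format;
    public string LastText { get; private set; } = "";

    // Configuration is set once, at instantiation.
    public Display(int decimalPlaces) { format = "F" + decimalPlaces; }

    public void Push(double value)
    {
        LastText = value.ToString(format, CultureInfo.InvariantCulture);
        Console.WriteLine(LastText);
    }
}

// Application layer: the only code that knows both abstractions and their connection.
public static class Application
{
    public static void Main()
    {
        var display = new Display(decimalPlaces: 2);
        var stdDev = new StandardDeviation { Output = display };   // the wiring
        foreach (double x in new[] { 2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0 })
            stdDev.Push(x);                                         // the last line printed is 2.00
    }
}
```

Swapping the display for any other class that implements the same interface would change only the wiring line in Application.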

Now you may be thinking that the two peer modules are still collaborating on the meaning of data flowing between them. No, that is knowledge held in the Application abstraction above them which wired them together.

Classes can have multiple ports. Ports can use different programming paradigms, not just data flow. For example, the display class could have a second port of type UI. The UI programming paradigm is a different compositional idea. It allows laying out the user interface. A wiring of UI ports means one part of the UI is displayed within another part. For example, an instance of display could be put inside an instance of a panel.

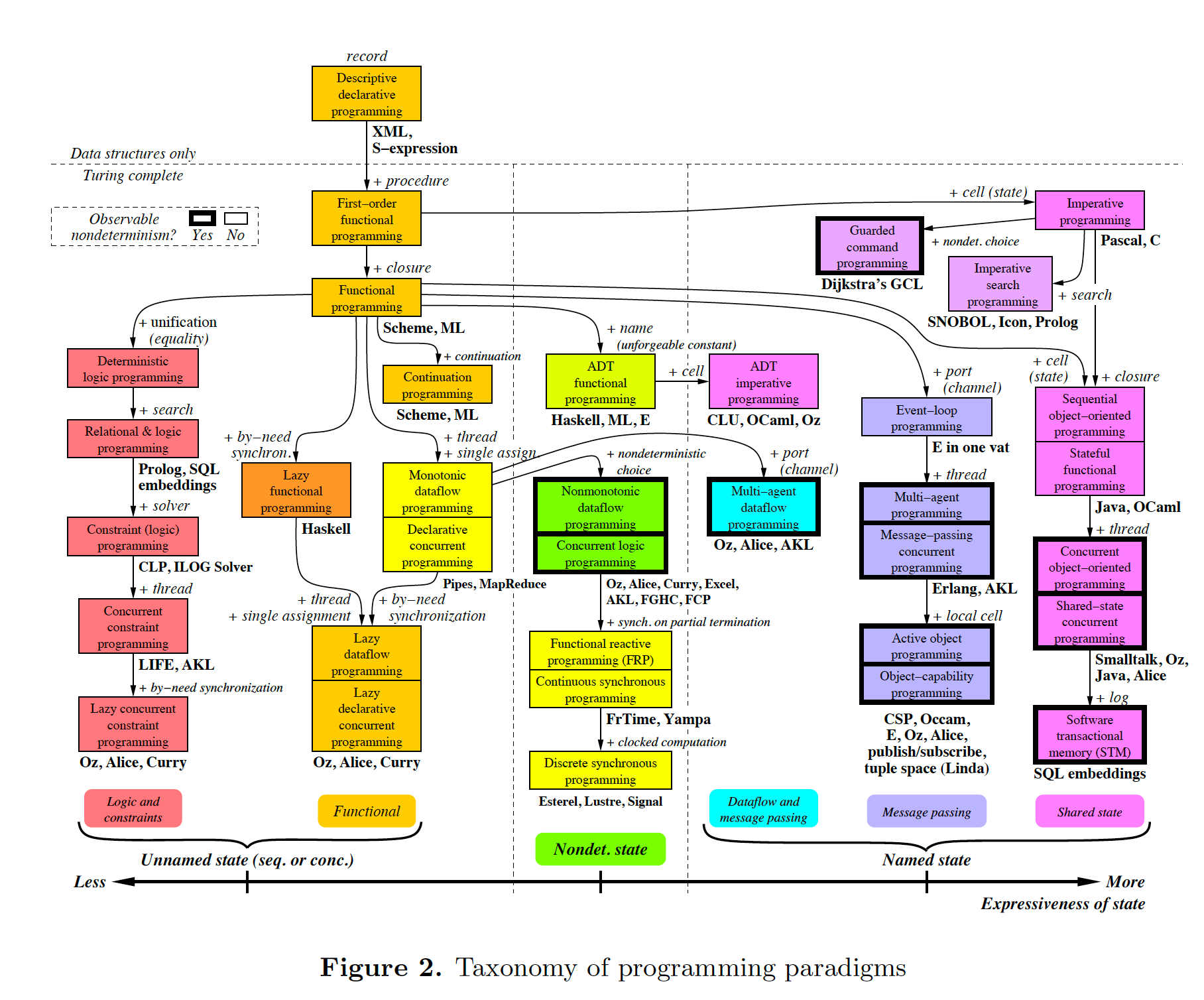

Other types of programming paradigms are invented as we need them in order to meaningfully express requirements. Think of programming paradigms as compositional abstractions. They are abstractions that provide the meaning of composing, such as dataflow, UI layout, events, data schemas or others you can invent.

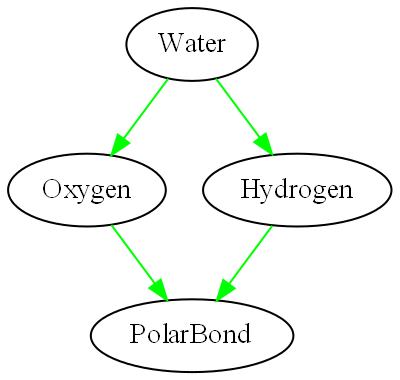

All dependencies are knowledge dependencies

A dependency on an abstraction in a lower layer means you need to understand the concept the abstraction represents at design-time. It is a knowledge dependency. You could not understand the code that calculates standard deviation without first knowing about the concept of squareroot. However, you do not require knowledge of the concept of a display, so that’s why that dependency is illegal.

Going one layer up, to understand the code in our example user story abstraction requires knowledge of both the concept of standard deviation and the concept of display. It is therefore fine for the user story abstraction to have dependencies on both and compose an instance of standard deviation and an instance of display.

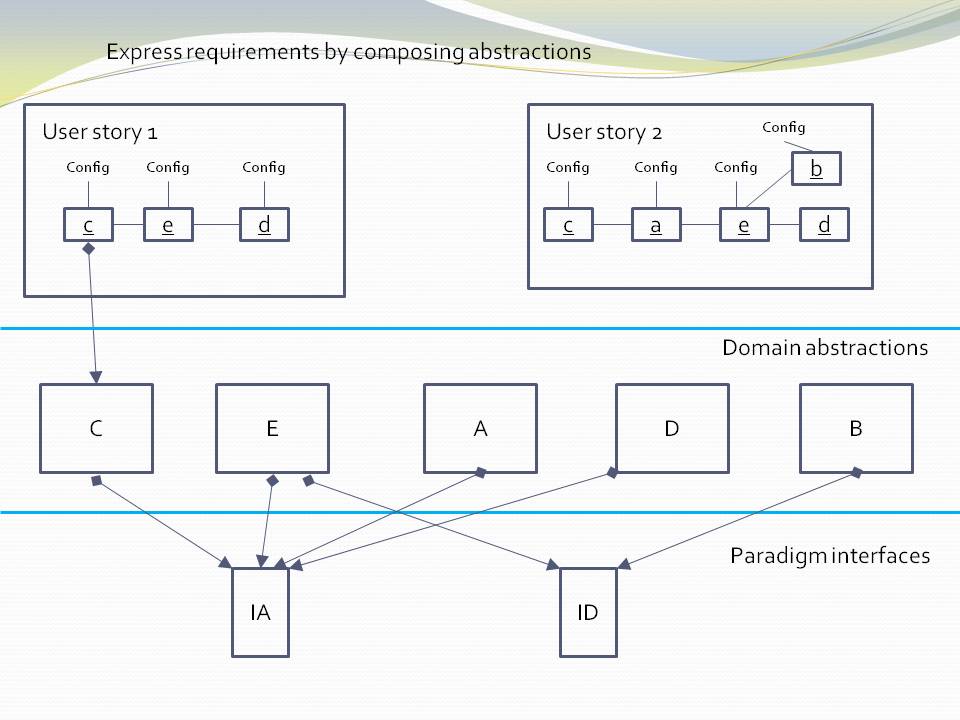

Abstractions that are more abstract become part of your language. We take this for granted when we use library abstractions, such as squareroot. In ALA, all lower layers make up the language for the next higher, more specific, layer until we are able to express the application’s user story abstractions.

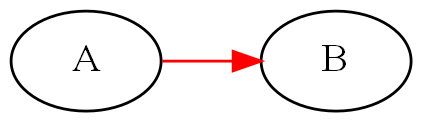

Good and bad dependencies

This is an alternative way of viewing ALA’s dependency constraints.

Conventional code contains both good and bad dependencies. Good dependencies are when we use abstractions, such as when we use a library class.

There will typically be very high numbers of bad dependencies in conventional applications. These bad dependencies are usually put there for different modules of a program to inter-communicate. They are also used to break a large module up into smaller modules - hierarchical decomposition. These smaller pieces may start with a stated functionality, but they are pieces specific to the module they are used by and therefore actually more specific than the module.

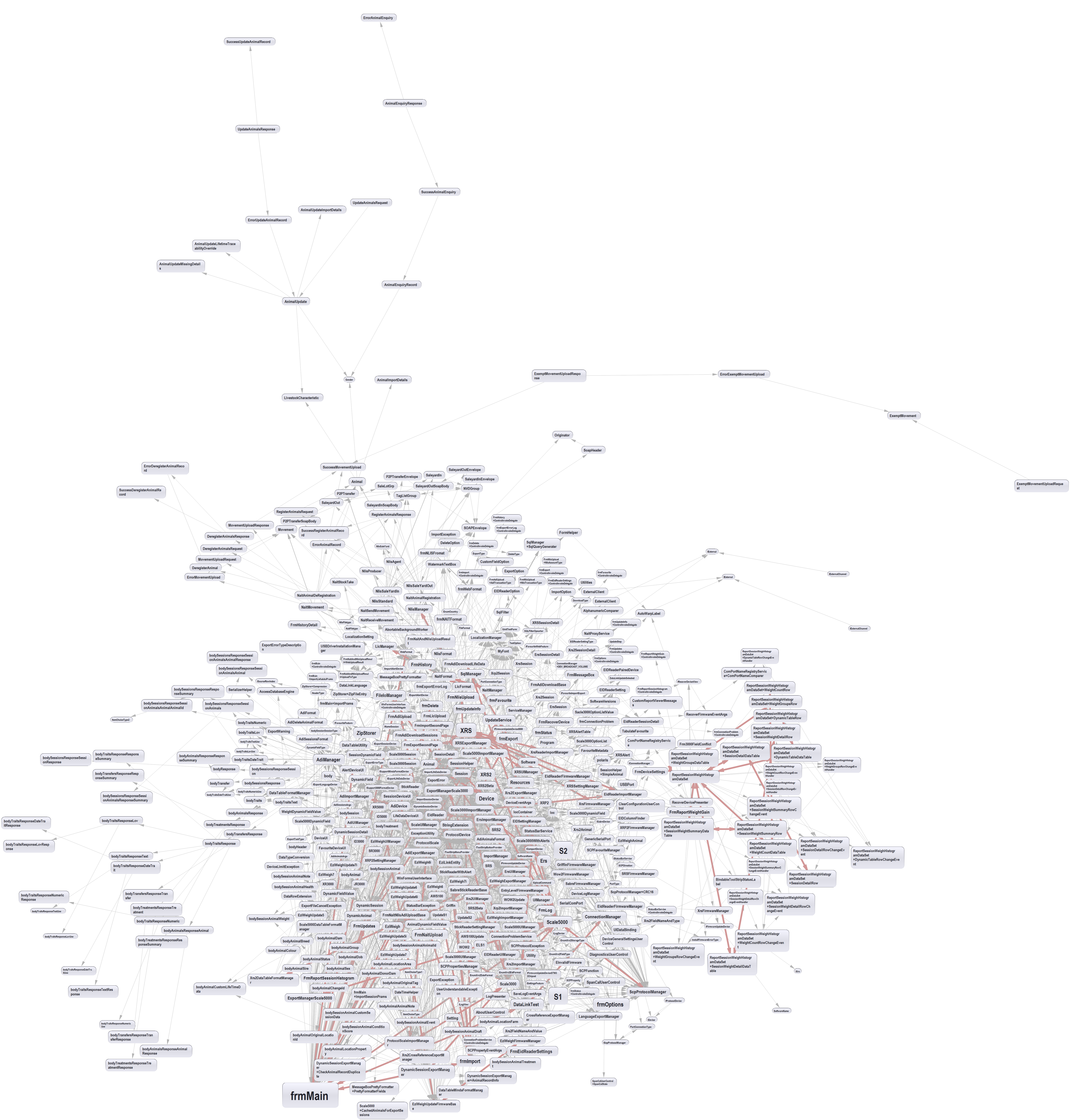

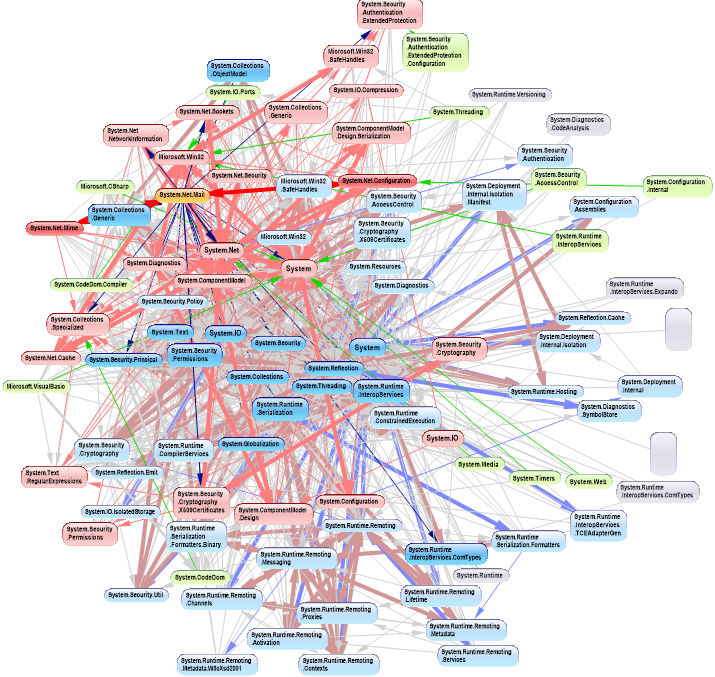

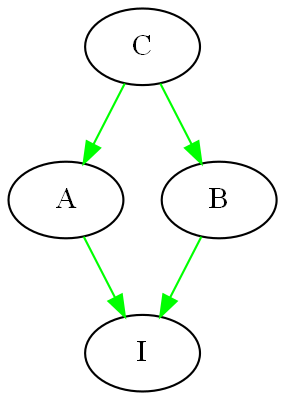

Bad dependencies make the dependency diagram look like the one on the right. It is an actual dependency graph of a conventional application that was completely cleaned up by ALA. My partner says it looks like two ferris wheels engaged in a mating act. Even if we manage to avoid cyclic dependencies, there are still typically many bad dependencies.

In ALA, all bad dependencies are illegal. Normally in conventional code we don’t distinguish between good and bad dependencies. We consider them all to be necessary if the system is to work. But it turns out that systems can be built using only good dependencies.

Good dependencies are not just good - they are really good. We want as many of them as possible, because then we are reusing our abstractions. Bad dependencies are not just bad - they are really bad. They cause a growing tangled network of complexity.

Since bad dependencies are illegal in ALA, how do parts of the system communicate? Well, each bad dependency simply becomes a line of code completely inside a higher layer abstraction. That line of code connects two instances of abstractions. There it is cohesive with other lines of code that connect other instances of abstractions that together make a whole user story.

If you think about it, circular dependencies come about because there are circular communications. Circular communication is perfectly valid. When you stop representing communications with dependencies, and start representing them with wiring code, circular wiring makes perfect sense. When you use only good dependencies, circular dependencies, and the whole dependency management problem, just go away.

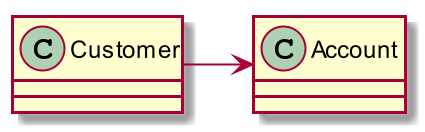

Nearly all relationships in a typical UML class diagram are bad dependencies. In ALA, the only legal UML class relationship is composition (filled diamond arrow), and then only if the class being used is more abstract (in a lower layer). Now, when you use an abstraction, you always refer to it by name rather than by an arrow on a diagram. You wouldn’t draw a line on a diagram when using a library function such as squareroot - you would just use squareroot by name as if it’s part of your language. Similarly, you wouldn’t draw 'instantiates and uses' relationships in an ALA application on a UML diagram. So, it turns out that if you drew a UML class diagram of an ALA application, you would just get disconnected boxes sitting in space. There would be no lines at all. They would be arranged in layers, as described below. The UML class diagram is completely redundant in ALA. In fact the UML class diagram, by encouraging bad dependencies between would-be peer abstractions, has probably caused more damage to software than any other software engineering meme. UML class diagrams are evil.

Metrics

Unfortunately, common metrics in use today do not understand abstractions. For example, CBO (Coupling Between Objects), which, despite its name, is the total number of classes with dependencies to or from a given class, does not distinguish between good and bad dependencies. It just counts them all, making the metric completely useless.

ALA recognises the importance of using abstractions and composability in dealing with complex systems. If metrics don’t also take into account this importance, they cannot work well.

A common problem when calculating CBO is whether or not to include library classes. Intuitively we know that library classes are abstractions. We see them as part of our programming language. So we feel they should not be included in the metric. The same should apply to all good dependencies - those that are dependencies on the knowledge of a concept in a lower layer. If CBO counted only bad dependencies, it would be an extremely useful metric. Perfect software would require the metric to be zero.

The CBO metric should first identify all potential abstractions, and then identify their abstraction levels relative to each other. Since abstraction and reuse are two sides of the same coin, Robert Martin points out that we can use the number of uses of (dependencies on) a given class as an estimate of its abstraction level. In this way it may be possible to take some bad legacy code and suggest which classes should become abstractions at which levels. If the programmer agrees with the tool, they can proceed to refactor out all the bad dependencies using ALA. This will require introducing new specific abstractions at a higher layer in which to put the wiring code for each bad dependency. That in turn will require the invention of compositional abstractions (interfaces) in a lower layer to allow the creation of ports on the classes that had the bad dependencies.
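A rough sketch of the two calculations suggested above is shown below. It is not an existing tool: the class DependencyMetrics, its method names, and the convention that layer 0 is the most abstract layer are all assumptions for illustration. Fan-in estimates how abstract a class is, and a dependency is counted as bad when it does not point to a strictly lower layer.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class DependencyMetrics
{
    // Fan-in: the number of distinct classes that depend on each class.
    // More uses suggests a more abstract (more reusable) class.
    public static Dictionary<string, int> FanIn(IEnumerable<(string From, string To)> dependencies) =>
        dependencies.Distinct()
                    .GroupBy(d => d.To)
                    .ToDictionary(g => g.Key, g => g.Count());

    // Counts dependencies that do not point to a strictly lower layer.
    // Assumed convention: layer 0 is the most abstract, higher numbers are more specific.
    public static int CountBadDependencies(
        IEnumerable<(string From, string To)> dependencies,
        IReadOnlyDictionary<string, int> layerOf) =>
        dependencies.Count(d => layerOf[d.To] >= layerOf[d.From]);
}

public static class Program
{
    public static void Main()
    {
        var layerOf = new Dictionary<string, int>
        {
            ["IDataFlow"] = 0, ["StandardDeviation"] = 1, ["Display"] = 1, ["Application"] = 2
        };
        var dependencies = new List<(string From, string To)>
        {
            ("Application", "StandardDeviation"),
            ("Application", "Display"),
            ("StandardDeviation", "IDataFlow"),
            ("Display", "IDataFlow"),
            ("StandardDeviation", "Display"),   // peer-to-peer: a bad dependency
        };

        Console.WriteLine(DependencyMetrics.CountBadDependencies(dependencies, layerOf)); // prints 1
        Console.WriteLine(DependencyMetrics.FanIn(dependencies)["IDataFlow"]);            // prints 2
    }
}
```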

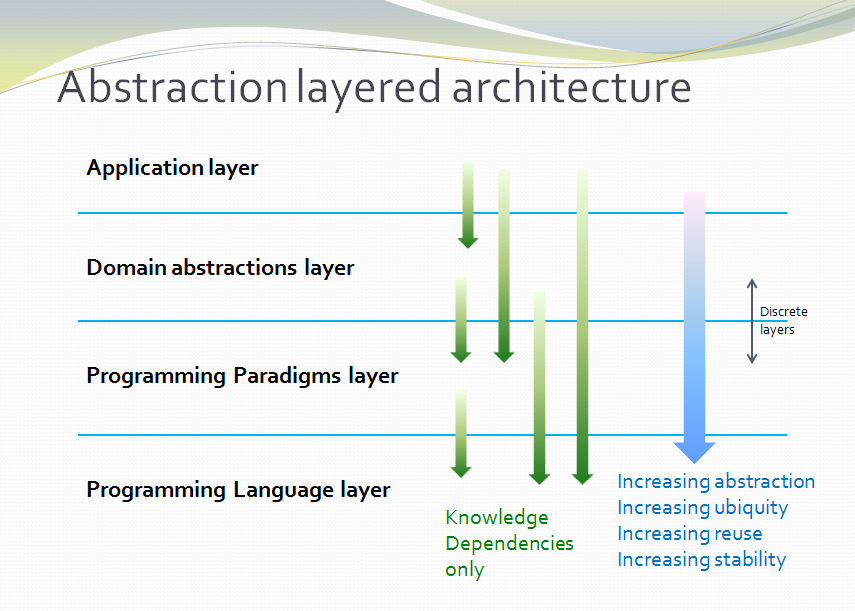

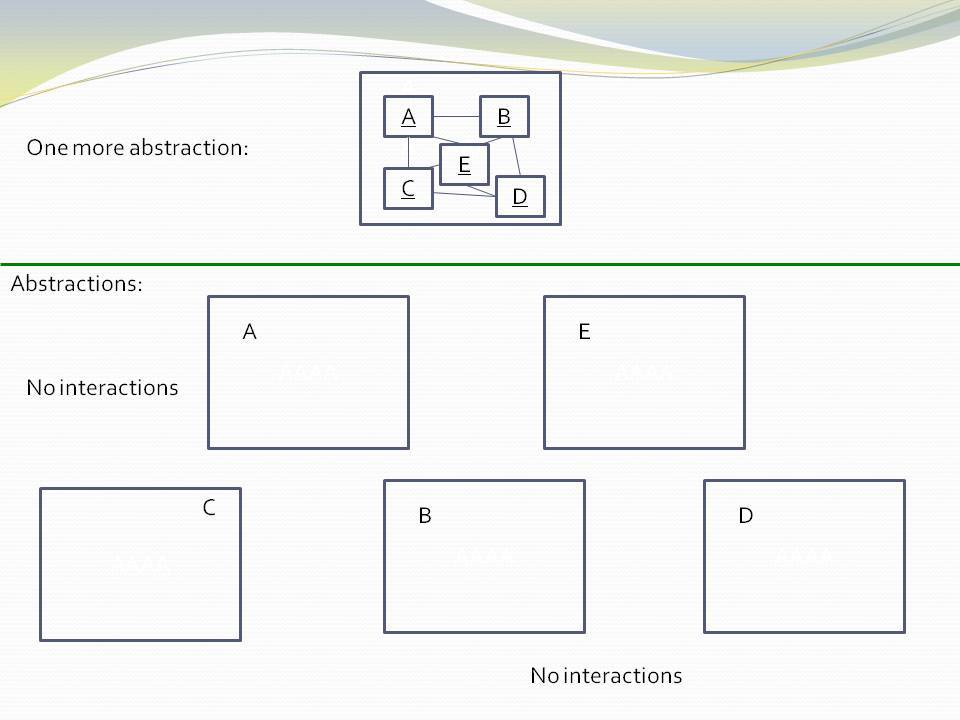

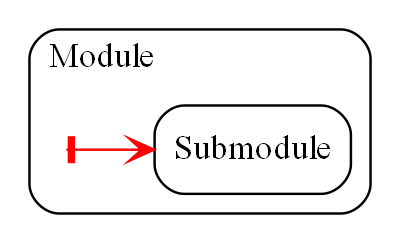

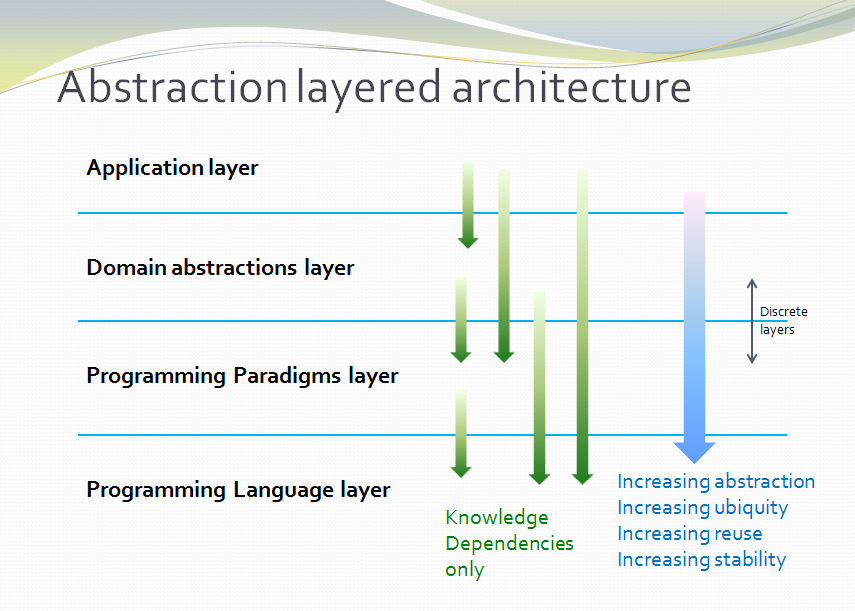

Emerging layers

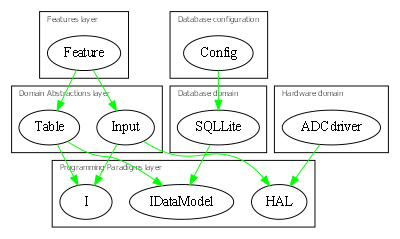

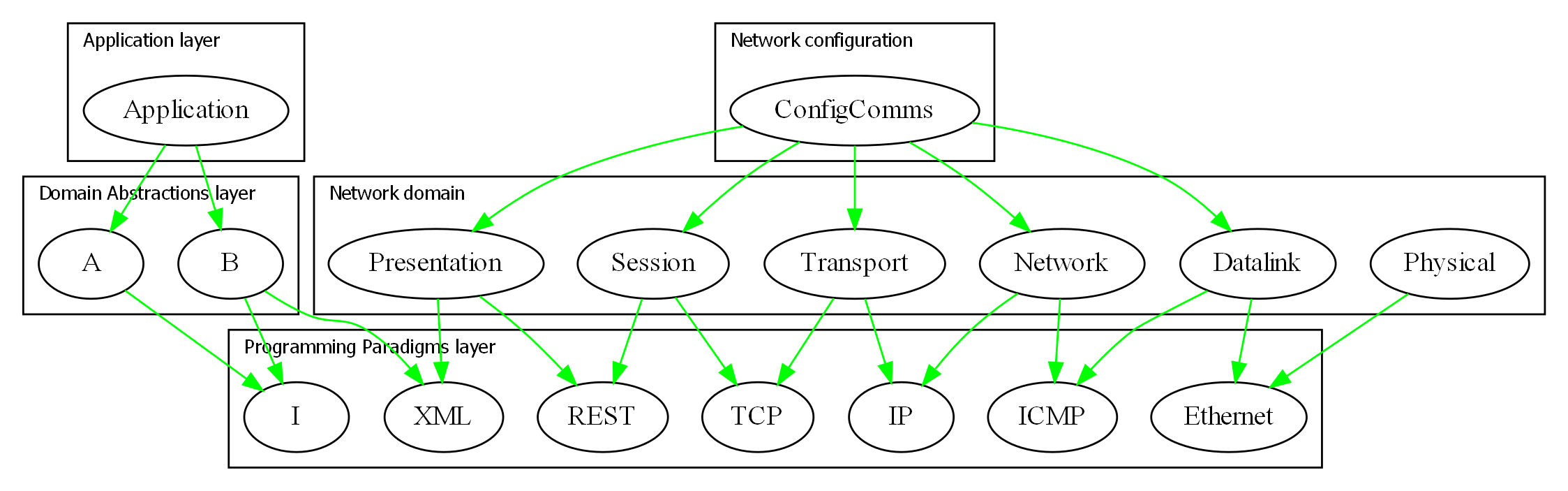

Because of the constraint that an abstraction that is depended on must be significantly more abstract, abstractions form layers. This gives the architecture its name: abstraction layered architecture. We give the layers names that reflect the types of abstractions that tend to go in them - application layer, domain abstractions layer, programming paradigms layer.

Each layer becomes a folder and a namespace in the implementation. This makes it very easy to know how to arrange our source files. The folders for each layer are not nested.

For large applications, another layer called the features layer or user stories layer comes into the picture between the application and domain abstraction layers. A specific application abstraction in the top layer then just composes a set of features or user stories needed in that specific application, and sets up any communication that may be needed between them.

There is no 'hierarchical' or 'nested' structure in ALA. In other words, abstractions cannot be contained within abstractions. There is no analog of a sub-module or sub-component, no such thing as a sub-abstraction. Abstraction layers replace hierarchical containment. This is because lower layer abstractions must be visible for reuse, not hidden inside an encapsulation hierarchy.

Once you have learned the concepts of the abstractions available in lower layers, it is easy to read and understand code in higher layers. Reading a line of code that uses an abstraction by name is like reading any other line of code. A good abstraction is when you don’t need to follow the indirection and go and read the code that implements the abstraction. For example, when we see a use of squareroot, if our brain has already learned the concept of squareroot once, we can concentrate on the code that is using the squareroot. We do not have to change context and go and read the code that implements squareroot. ALA is about achieving that level of readability for every single dependency in the entire application.

All abstractions must be small

This third constraint prevents us from conforming to the first two constraints by simply putting everything into one big ball of mud abstraction. That’s obviously not desirable, so we need this constraint to force us to create abstractions from which we can compose instances to make our application.

Abstractions are internally highly cohesive, which means that all code inside them is inter-related. Internally they are a small ball of mud. If that highly interrelated code is to be understood, it needs to be small.

A rule of thumb is around 100 to 500 lines of code. If the code is in diagram form (which will often be the case for reasons we will explain later), we should limit the size to 100-500 nodes plus edges.

If an abstraction contains more than 500 lines, it is starting to get over the brain limit for other programmers to understand. If abstractions average less than 100 lines of code, we will likely have more abstractions than we need, and burden ourselves with an unnecessarily high number of them to learn. The sweet spot, which relates to the size of our brains, is somewhere in-between.

Other emergent patterns

Many other patterns and properties emerge from ALA. Many of them are already known in software engineering, which is not surprising - such things as DSLs (Domain Specific Languages), Dependency Injection, Composite and Decorator patterns, monad-like composition, etc.

There are often subtle but important differences. For example, wiring instances of abstractions in ALA uses dependency injection, but you can’t use interfaces that are specific to the classes being wired.

Another example is the observer pattern (publish/subscribe). It can be used to achieve calls going up the layers at run-time in ALA, but may not be used between peer abstractions in the same layer. If they are in the same layer, the subscriber’s reference to the publisher would be an illegal dependency. A line of code in an abstraction in a higher layer must do the subscribing, which is effectively what the wiring pattern described below is.
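A minimal sketch of that rule using a C# event is shown below. The Button and Counter classes are hypothetical domain abstractions invented for this example. Neither refers to the other, and the subscription is a single line in the higher layer.

```csharp
using System;

// Domain abstraction: publishes an event and knows nothing about its subscribers.
public class Button
{
    public event Action Clicked;

    public void Press() => Clicked?.Invoke();
}

// Domain abstraction: offers a method and knows nothing about any publisher.
public class Counter
{
    public int Count { get; private set; }

    public void Increment() => Count++;
}

// Higher layer abstraction: the only place where publisher and subscriber meet.
public static class Application
{
    public static void Main()
    {
        var button = new Button();
        var counter = new Counter();

        button.Clicked += counter.Increment;   // the subscribing is done here, not inside Counter

        button.Press();
        button.Press();
        Console.WriteLine(counter.Count);      // prints 2
    }
}
```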

Inheritance is not used in ALA. We only use composition because inheritance breaks abstractions.

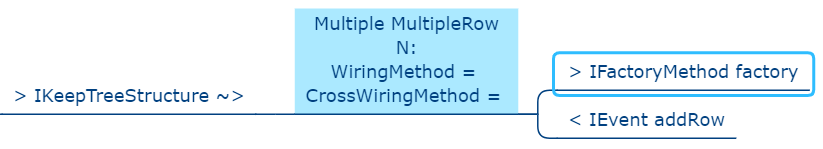

Wiring pattern

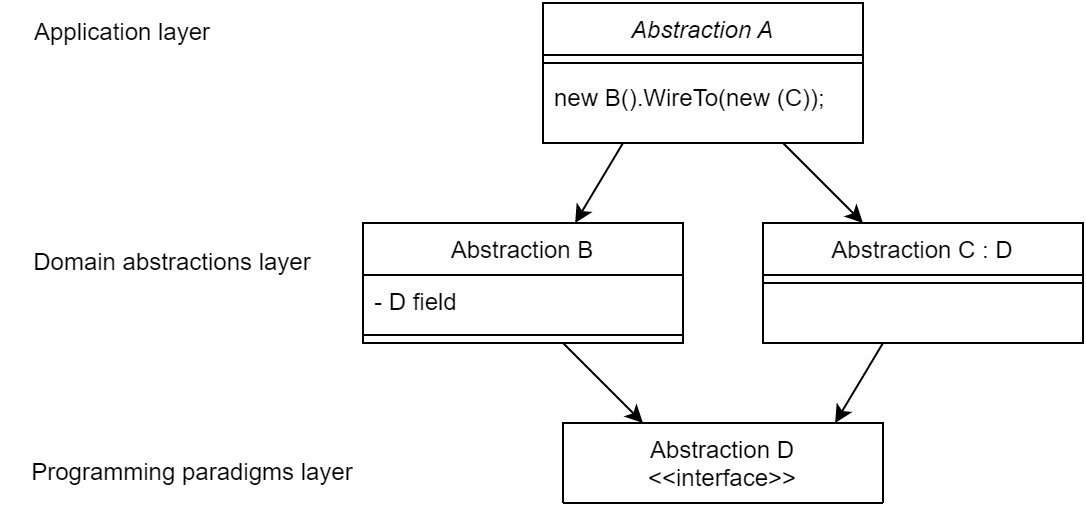

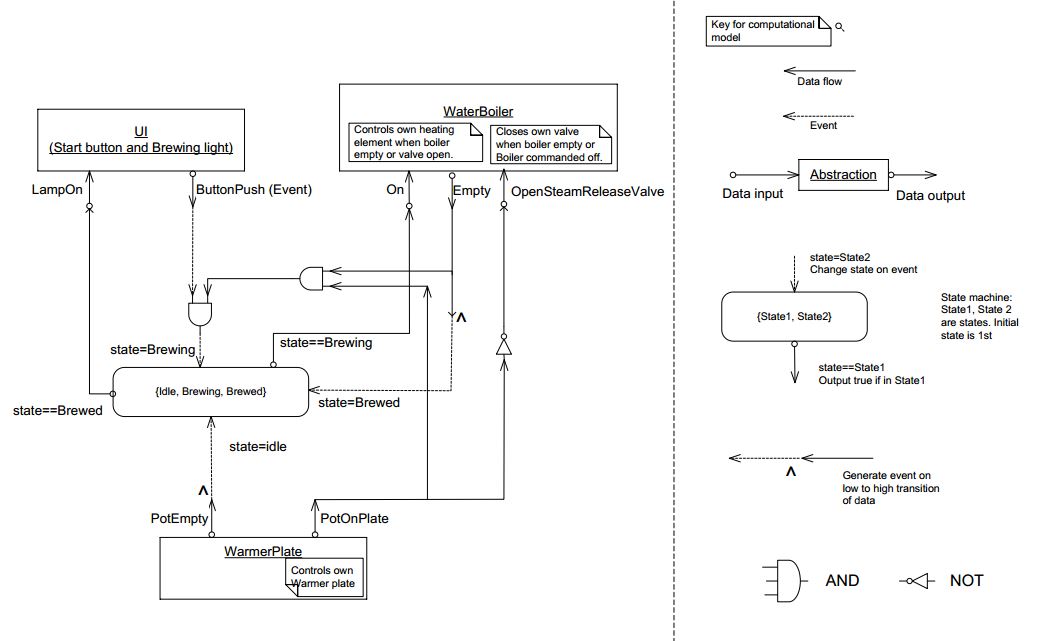

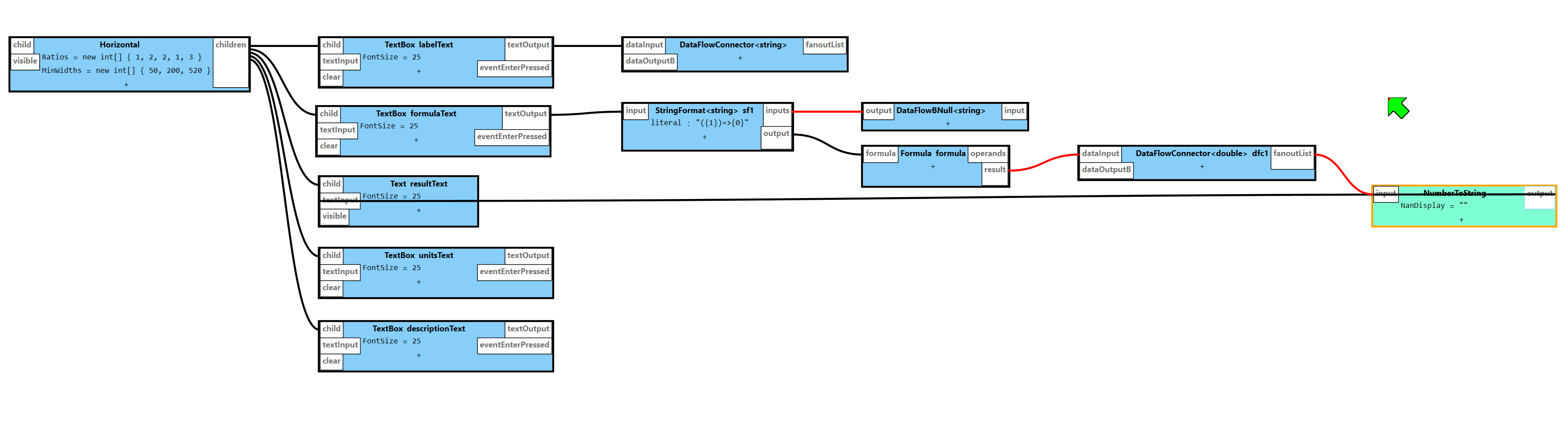

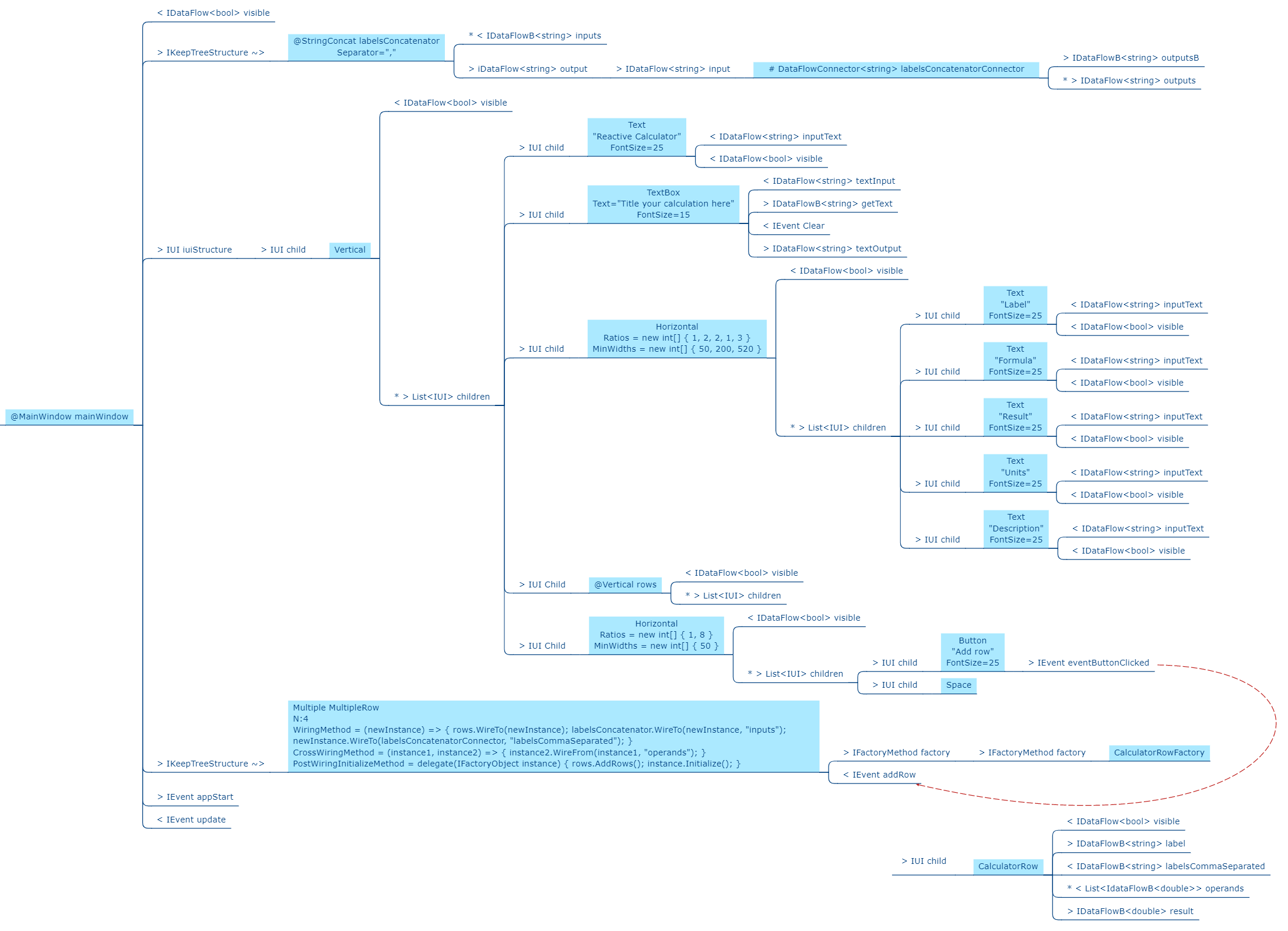

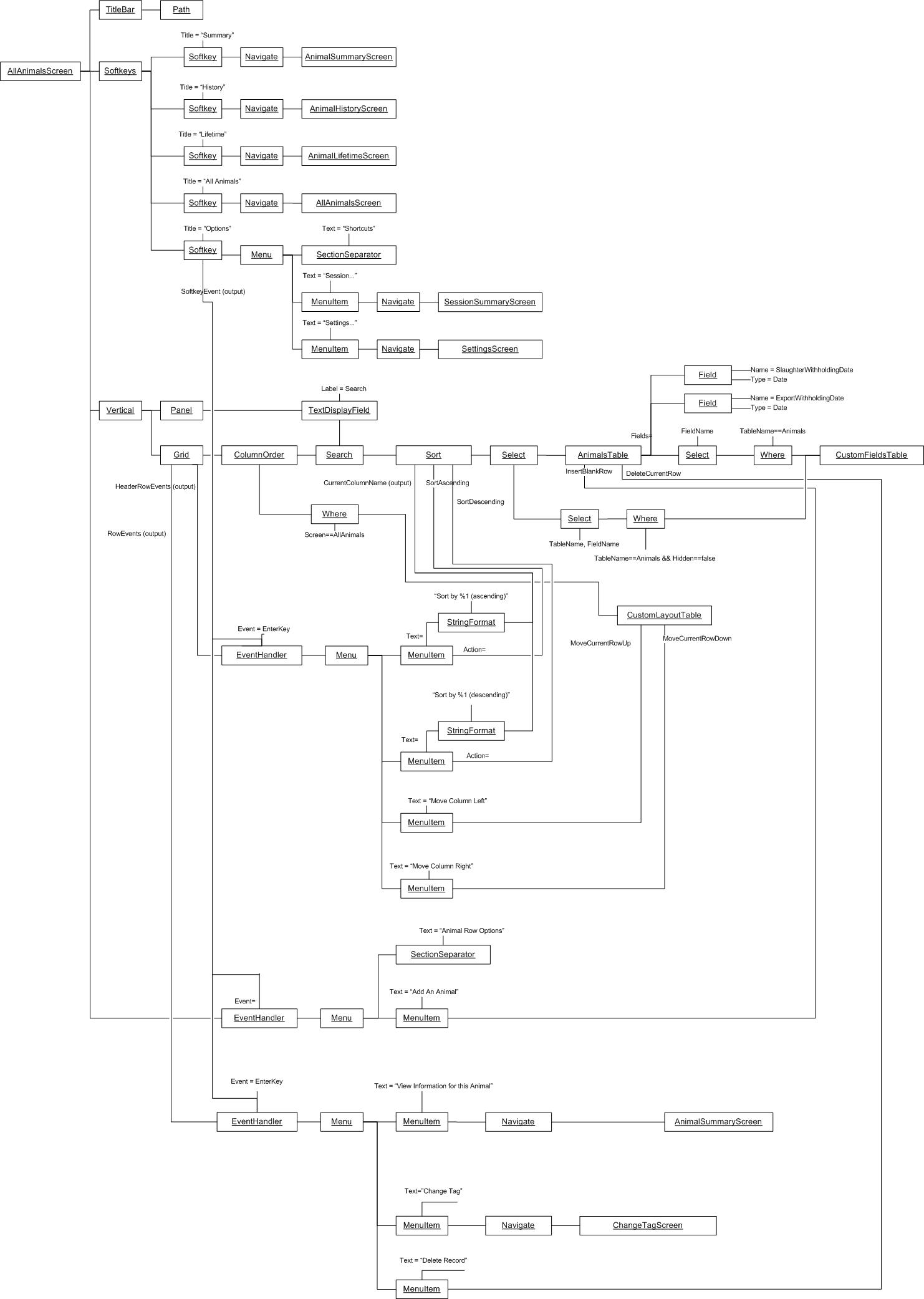

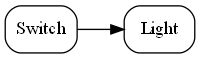

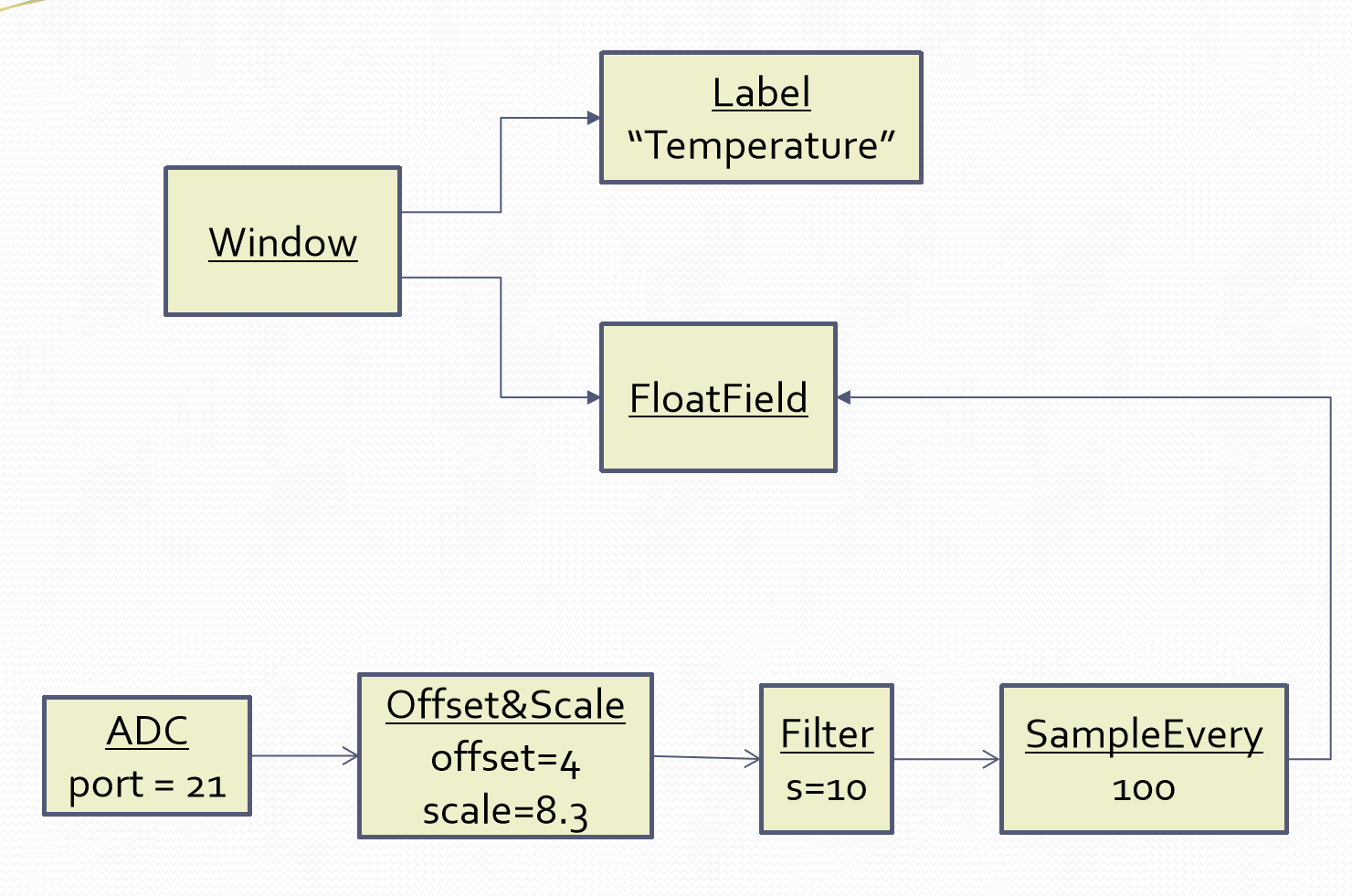

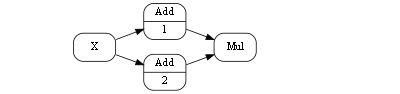

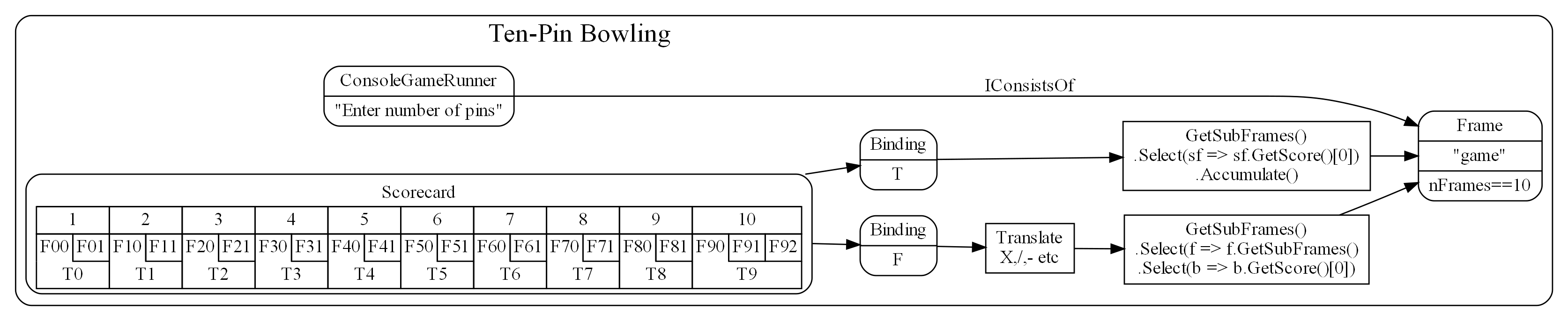

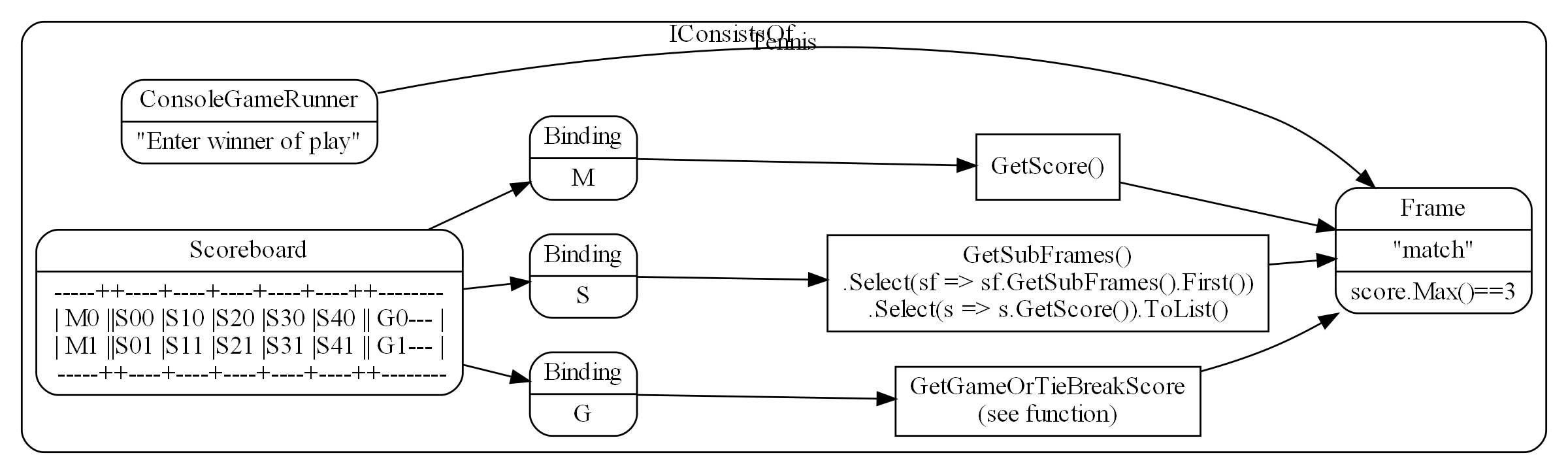

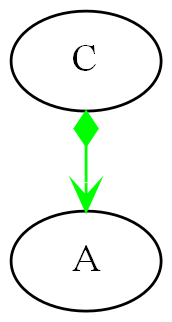

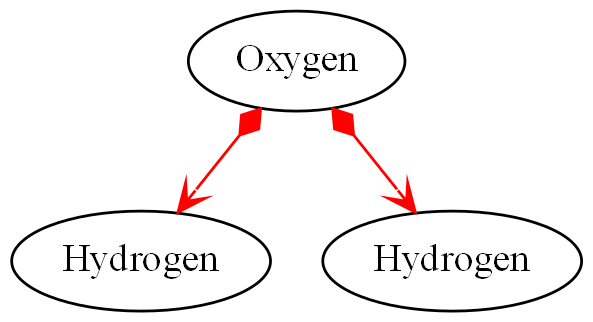

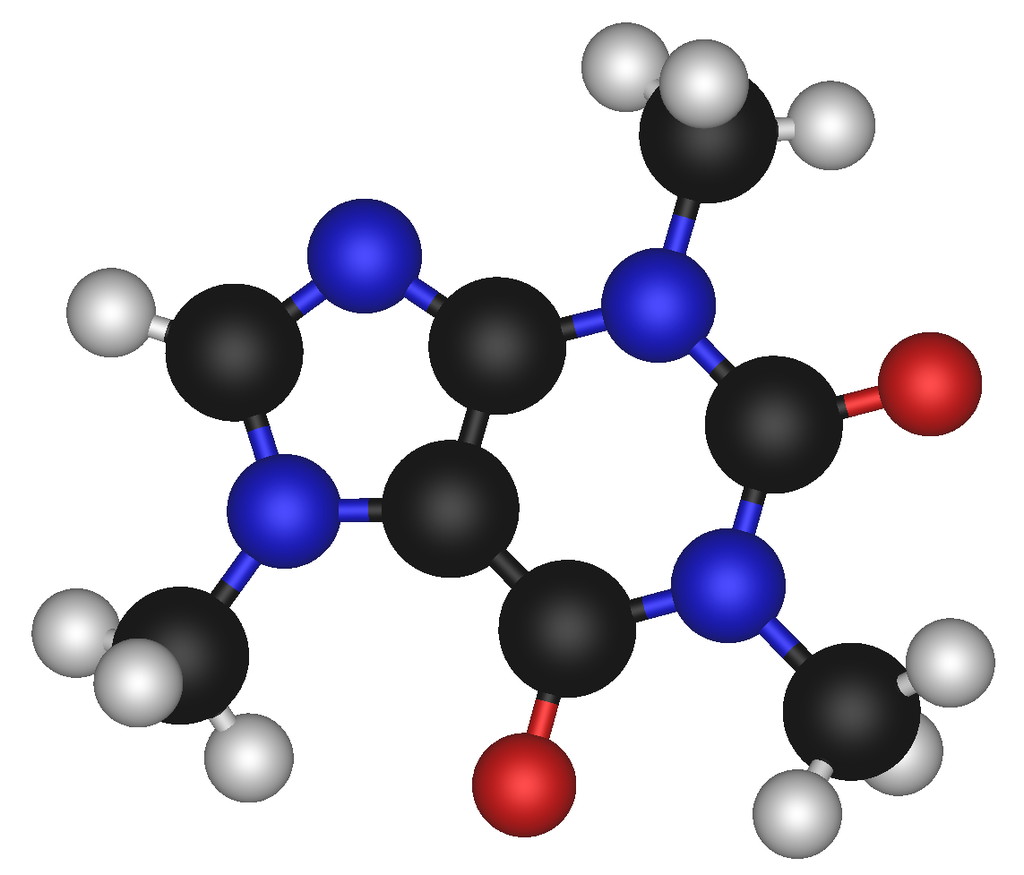

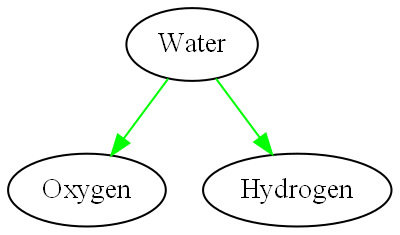

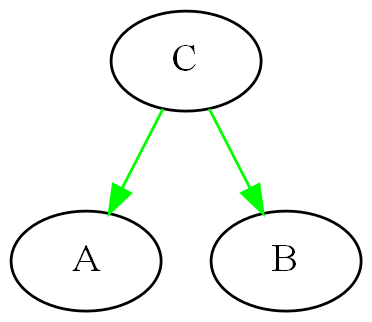

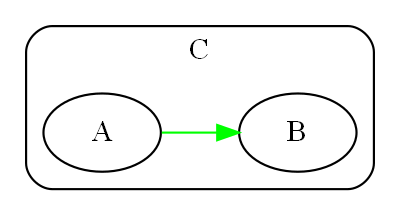

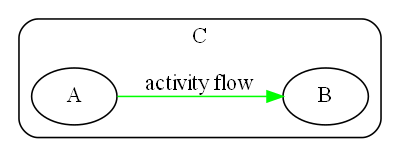

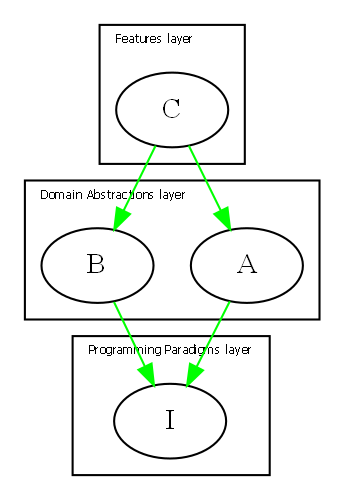

I use the wiring pattern shown on the right frequently to build ALA applications.

Abstraction A in the application layer has legal dependencies on abstractions B and C in the domain abstractions layer. B and C have dependencies on D in the programming paradigms layer.

D is a programming paradigm interface. This interface is not owned by B or C. It is its own abstraction representing a compositional idea, such as Dataflow or UI layout.

B and C have ports of type D. B’s port is implemented as a field of type D, and C’s port is implemented by implementing D’s interface.

A can create an instance of B and an instance of C and wire them together because they have compatible ports. Wiring causes the instance of C, cast as the interface, to be assigned to the field in B.

While abstraction B and abstraction C know nothing about each other, the instances of B and C can communicate with each other at run-time because they both know about the programming paradigm interface, D.
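The pattern can be sketched in a few lines of C#. The letters A, B, C and D are kept from the description above; the member names (Send, Port, DoWork, Received) are assumptions made for illustration.

```csharp
using System;

// Programming paradigms layer: D is its own abstraction, owned by neither B nor C.
public interface D
{
    void Send(string message);
}

// Domain abstractions layer: B's port is a field of type D.
public class B
{
    public D Port;

    public void DoWork() => Port?.Send("hello from an instance of B");
}

// Domain abstractions layer: C's port is its implementation of D.
public class C : D
{
    public string Received { get; private set; } = "";

    public void Send(string message) => Received = message;
}

// Application layer: A depends on B and C, instantiates them and wires them.
public static class A
{
    public static void Main()
    {
        var c = new C();
        var b = new B { Port = c };   // wiring: the instance of C, as a D, is assigned to B's field

        b.DoWork();
        Console.WriteLine(c.Received); // prints "hello from an instance of B"
    }
}
```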

A tiny example

Every chapter has an example, and we do the same here in the summary. Unlike most pedagogical sized examples, these examples progressively become non-trivial. Yet because of ALA’s power, they remain uncomplicated and easy to understand.

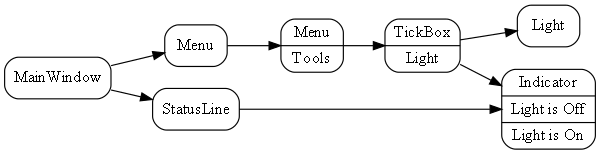

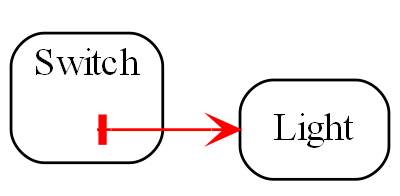

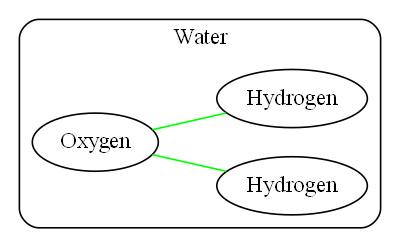

Requirement: Make a switch control a light.

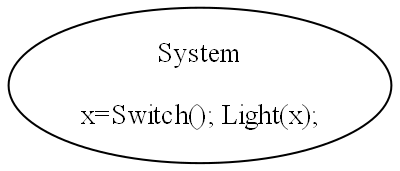

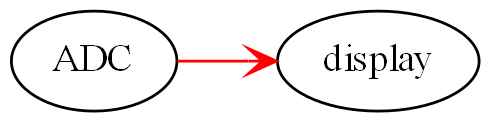

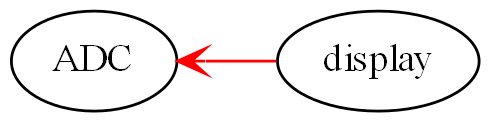

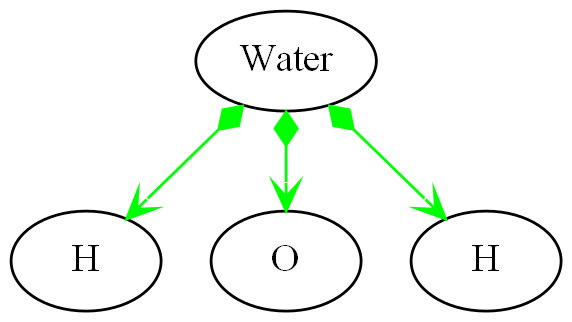

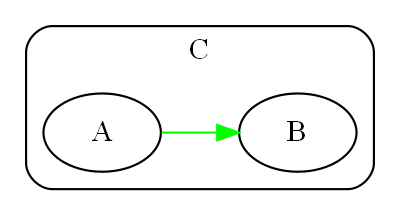

The diagram above is not documentation. Nor is it a high-level architectural view of the solution. It is the solution. It is the implementation for the user story abstraction. It contains all the detail needed for an executable user story. The diagram is literally compiled. Let’s do that now by hand:

Application layer

Here is the diagram converted to text form:

var system = new Switch().WireTo(new Light());

system.Run();

Given this code, it is not difficult for any programmer to write the necessary Switch and Light domain abstractions as classes, together with a Dataflow programming paradigm abstraction as an interface to make that code execute. We will do that in a moment.
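In the meantime, here is one rough sketch of classes that would let those two lines compile and run. It is only an assumption about what they could look like, not the implementation developed in this book: the interface name IDataFlow, a WireTo method written directly on Switch, and a Run method that simulates two presses of the switch are all invented for this illustration.

```csharp
using System;

// Programming paradigms layer: a hypothetical dataflow interface.
public interface IDataFlow<T>
{
    void Push(T data);
}

// Domain abstraction: knows about dataflow, knows nothing about Light.
public class Switch
{
    private IDataFlow<bool> output;   // output port
    private bool isOn;

    // Wires the output port and returns this instance so calls can be chained.
    public Switch WireTo(IDataFlow<bool> destination)
    {
        output = destination;
        return this;
    }

    public void Toggle()
    {
        isOn = !isOn;
        output?.Push(isOn);
    }

    // Stand-in for real hardware: simulates the switch being pressed twice.
    public void Run()
    {
        Toggle();
        Toggle();
    }
}

// Domain abstraction: knows about dataflow, knows nothing about Switch.
public class Light : IDataFlow<bool>  // input port
{
    public bool IsLit { get; private set; }

    public void Push(bool on)
    {
        IsLit = on;
        Console.WriteLine(on ? "Light on" : "Light off");
    }
}

// Application layer: the two lines from the text above.
public static class Application
{
    public static void Main()
    {
        var system = new Switch().WireTo(new Light());
        system.Run();   // prints "Light on" then "Light off"
    }
}
```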

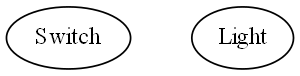

In conventional code, we would likely have broken the system up into two modules, one for the switch and one for the light. The switch might directly call a method in the interface for the light, or vice versa. In ALA you can’t do that. The concepts of Switch and Light, already handed to us as abstractions in the words of the requirements, must remain as abstractions. At design-time, they can’t know about one another and they can’t know about the specific system. As abstractions they can’t communicate with one another. But at run-time, instances of them, after having been wired together, can communicate with one another.

The abstract concept of dataflow is invented to allow instances of Switch and Light and many other things to communicate with data at run-time. Dataflow is an even more abstract abstraction. It resides in the programming paradigms layer. It is a stream of data without end. It is not a specific interface of either Switch or Light. It allows Switches and Lights to be wired arbitrarily to anything using the same dataflow concept.

The Switch-Light system is also an abstraction, albeit a more specific one than Switch or Light. Multiple instances of it could be used in a building. Its purpose is to know about the system comprising a light and a switch connected together. It knows about Switch, Light and Dataflow as abstract concepts but doesn’t know anything about their implementation details.

The system diagram is a direct, formal restatement of the requirements. So the diagram is three things in one: The formal statement of requirements, the high level architecture, and the executable. One source of truth for all three. Conventional software engineering usually has three different documents for these, which then must be kept synced.

When the program is this small, it looks like we just created four abstractions when two modules would have done. However, by creating an application layer abstraction to represent the system level knowledge, and a programming paradigm layer abstraction to represent the concept of Dataflow, we make several powerful improvements to the code that are important as the application scales up in size:

-

There is now an explicit place that cohesively implements the requirement instead of having the requirement’s implementation distributed in an obfuscated way inside Switch and Light. What is loose coupling between modules in conventional code becomes cohesive code completely contained inside a higher (more specific) abstraction in ALA. Changes to the requirements are made in this one place.

-

The abstractions System, Switch, Light and Dataflow are all zero-coupled with one another: the code inside each one knows nothing about the code inside the others. Every abstraction’s internal code is readable in isolation.

-

Being abstractions, Switch and Light are reusable. They are reusable in the same application or in other applications in the domain. Dataflow is even more reusable.

-

Programming paradigms provide the meaning of connecting together two instances of domain abstractions. We can use multiple different programming paradigms in the one top level design. ALA is said to be polyglot in programming paradigms. This makes it very expressive.

-

Switch and Light do not need a shared understanding for the interpretation of data (a shared language). We think that shared languages are necessary because people need a shared language to communicate. So we tend to create modules that work in the same way. These shared understandings are sometimes referred to as contracts. If Switch and Light were modules, they would need to agree on something like true means on and false means off. In ALA, this type of coupling is also gone. The knowledge about interpretation of data is wholly contained inside the user story abstraction in the higher layer. This may seem trivial in this simple case, but it becomes enormously significant in larger programs and distributed programs.

-

If the instances of Switch and Light are deployed to different physical locations, the Switch-Light system is still a single cohesive abstraction describing that system. Normally, conventional programs are decomposed first into modules across physical locations, but in ALA you always compose first by user stories, regardless of the physical deployment of its constituent domain abstraction instances. This will also be highly significant as the system scales up in size.

-

Switch and Light are easily testable with unit tests.

-

Testing the system abstraction is exactly acceptance testing. In ALA, you always test with dependencies in place, but you mock the ports. Just as you would not mock out a dependency such as a square root function, you do not mock any dependencies in ALA, because all dependencies are knowledge dependencies. Testing the system abstraction means testing that an instance of the Switch-Light system works.

-

The writing of the Switch and Light abstractions can be handed off to different individuals or teams because, as abstractions, they know nothing about each other, and they know nothing about the System. In fact abstractions will be better quality if the teams do not collaborate with each other so that the abstractions themselves do not collaborate. (Corollary of Conway’s law.)

-

In terms of methodology, instead of decomposing the Switch-Light system into parts, we compose it from abstractions. This point may seem subtle, but it is profoundly important. The conventional divide and conquer methodology of splitting a system into smaller but collaborating parts until the parts are small enough to implement is arguably the prevalent approach in traditional software engineering. It causes a lot of damage. It results in parts that are more specific than the system (can’t be reused for anything) and, more significantly, inter-collaborating parts that have implicit knowledge of each other. The meme divide and conquer should be replaced with invent and compose.

Here is skeleton code of the two domain abstractions.

Domain abstractions layer

// domain abstraction
class Switch
{
    // port
    private IDataFlow<bool> output;

    // called from internal code (not shown) when it detects a hardware change
    private void SwitchChange(bool newState)
    {
        output.Send(newState);
    }
}

// domain abstraction
class Light : IDataFlow<bool> // port
{
    void IDataFlow<bool>.Send(bool data)
    {
        if (data)
        {
            // turn on the light
        }
        else
        {
            // turn off the light
        }
    }
}

Each of these abstractions implements a port, which allows instances of them to be wired using the programming paradigm, DataFlow.

You may be wondering why the Switch’s output port is private. That’s because we want the public interface of Switch to just be the 'configuration interface' used by the system abstraction when it instantiates a Switch. The WireTo method is designed to be able to wire the private port.

Here is our programming paradigms layer which contains the DataFlow abstraction:

Programming Paradigms layer

// Programming paradigm: DataFlow
interface IDataFlow<T>
{
    void Send(T data);
}

In ALA, we frequently use the wiring pattern, as depicted by the diagram in Figure 4, which consists of instantiating domain abstractions and wiring them together by ports that use an even more abstract interface. The wiring pattern is quite ubiquitous, and therefore comes from a foundation layer that resides below the Programming Paradigms layer:

Foundation layer

public static object WireTo(this object a, object b)
{
    // using reflection:
    // 1. Find a private field in object "a" that matches in type an interface implemented by object "b".
    // 2. Cast and assign object "b" to that field in object "a".
    // 3. Return object "a".
}

Note: A basic implementation of WireTo is listed in Chapter Two. You can get the source for a WireTo extension method from one of the GitHub repositories for the example projects in several following chapters.

Note: ALA does not require the use of reflection. I like to use reflection because it allows me to use an extension method to get a WireTo operator implemented for all domain abstractions. It allows me to make specifying the port name optional. It also allows me to make the port fields in the class private so they do not look like part of the public configuration interface to the layer above. And it spares me from writing setter methods for every port.
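Because reflection is optional, the same wiring can also be sketched in plain C, the language used for the thermometer example project in chapter one. The following is only a minimal sketch under assumptions: the names DataFlowBool, Switch_Change, Light_Init and Switch_WireTo are hypothetical and are not taken from the ALA example projects. The port is a small struct holding a function pointer, and wiring is a plain pointer assignment made by the layer above.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Programming paradigm: dataflow of bool values.
   Hypothetical C rendering of IDataFlow<bool>. */
typedef struct DataFlowBool {
    void (*send)(struct DataFlowBool *self, bool data);
} DataFlowBool;

/* Domain abstraction: Switch. It knows only about DataFlowBool. */
typedef struct {
    DataFlowBool *output;   /* output port, set by wiring */
} Switch;

/* Called by Switch's own code when it detects a hardware change. */
void Switch_Change(Switch *sw, bool newState)
{
    assert(sw != NULL);
    if (sw->output != NULL) {   /* an unwired port is simply ignored */
        sw->output->send(sw->output, newState);
    }
}

/* Domain abstraction: Light. Its input port is the first member so that
   a DataFlowBool* can be converted back to a Light*. */
typedef struct {
    DataFlowBool input;
    bool isOn;              /* stands in for the real hardware output */
} Light;

static void Light_Send(DataFlowBool *self, bool data)
{
    Light *light = (Light *)self;   /* valid because input is the first member */
    light->isOn = data;
}

void Light_Init(Light *light)
{
    assert(light != NULL);
    light->input.send = Light_Send;
    light->isOn = false;
}

/* Wiring, performed by the system abstraction in the layer above. */
void Switch_WireTo(Switch *sw, DataFlowBool *destination)
{
    assert(sw != NULL);
    sw->output = destination;
}
```

The system abstraction would wire an instance of each with something like `Light_Init(&light); Switch_WireTo(&sw, &light.input);`. As in the C# version, neither Switch nor Light mentions the other; both depend only on the more abstract dataflow type.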

Alternatively, if you generate the wiring code from the diagram automatically using a tool, and you make the port fields public, the tool could generate wiring code like the following instead of calls to WireTo:

new Switch().output = (IDataFlow<bool>) new Light();

Now that we know how to express requirements by composition of domain abstractions, let’s quickly demonstrate the ease of maintenance of our application:

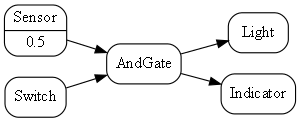

Requirement: Add a sensor to turn on the light when the switch is on and it is dark. And give a feedback indication:

(For these small examples, we will manually generate code from the diagrams.)

Here is the diagram converted to text form:

var andGate = new AndGate();
new Main()
    .WireTo(new Switch()
        .WireTo(andGate
            .WireTo(new Light())
            .WireTo(new Indicator())))
    .WireTo(new Sensor(threshold: 0.5)
        .WireTo(andGate))
    .Run();

We just invented some new domain abstractions: AndGate and Sensor, again directly implied by the requirements.

One of the domain abstraction instances has a configuration of 0.5. This is a threshold for expressing the requirement clause "is dark".

Notice that this application is easier to write in this way than it would be in conventional C code. This is because the programming paradigm we are using, dataflow, suits the expression of these requirements. Most C code to do even such a simple requirement as this would likely already be messy in the way it handles run-time execution.

The astute reader will notice that the AndGate can’t implement IDataFlow<bool> twice for its two inputs. In later projects, we will show how we work around this completely unnecessary constraint of most languages.

You may also notice that the fanout from the output of the AndGate to both the Light and the Indicator won’t work because an output port can be wired only once. We show how this implementation problem is solved in later projects as well.

Now that we have some reusable domain abstractions and programming paradigms, let’s quickly write another trivial application:

Requirement: Turn on the light from a tick item in the Tools menu of a PC application, and give an indication in the status bar when the light is on.

Here is the diagram converted to text form:

var indicator = new Indicator( {"Light is off", "Light is On"} );

new MainWindow()

.WireTo(new Menu())

.WireTo(new Menu("Tools")

.WireTo(new TickBox(label:"Light")

.WireTo(new Light())

.WireTo(indicator)

)

)

)

.WireTo(new StatusBar()

.WireTo(indicator) // put the indicator on the UI

)

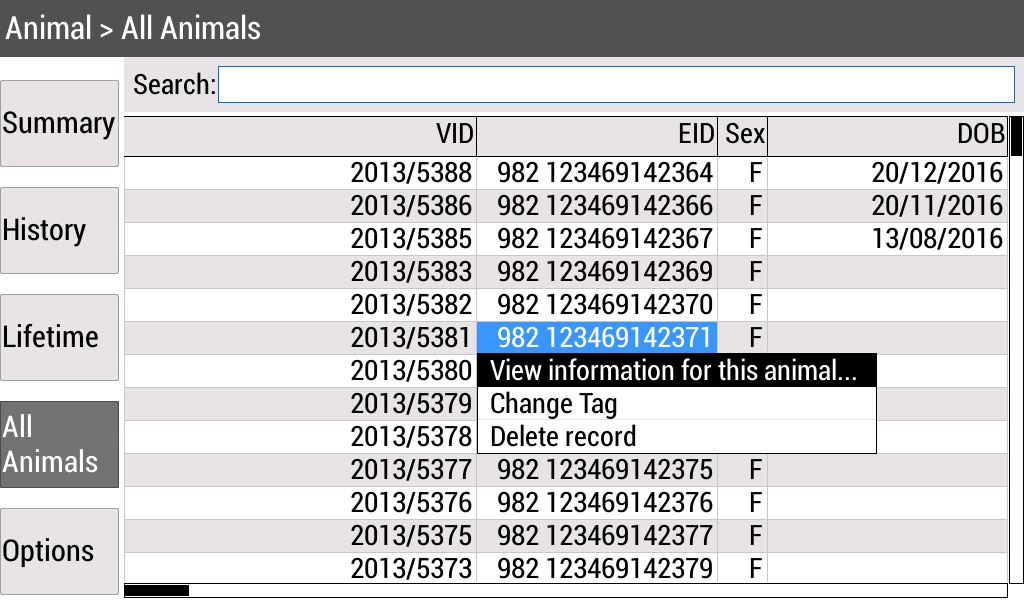

.Run();Here we are introducing some graphical UI, so we invented another programming paradigm for "UI layout". It is used between all the UI elements: MainWindow, Menu, Tickbox, StatusBar, Indicator. Wiring things together using that programming paradigm means things are arranged inside things on the UI.

Notice how ALA is polyglot in programming paradigms. We use two programming paradigms, UI layout and dataflow, to express the user story. Notice also that we don’t separate UI from business logic and data models as we do in conventional architectural layering patterns. These are highly cohesive things from the perspective of user stories and ought to be kept together. Instead, we separate the implementations of the domain abstractions. It is still easy to swap out, for example, the UI implementation. The diagram above could be implemented as a web application or a desktop application by swapping between two sets of UI domain abstraction implementations.

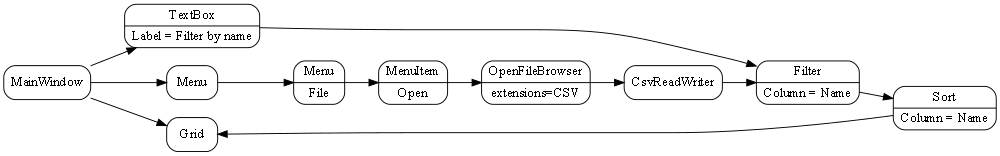

Let’s do an application to browse for and display a (dynamic content) CSV file on a grid, filtered by a user specified name, and sorted by names. The CSV file has headings that will display in the grid.

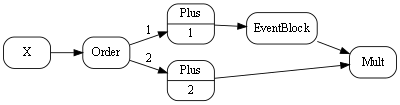

The wiring between the MenuItem and the OpenFileBrowser uses an Event-driven programming paradigm.

The wiring between the CsvReaderWriter, Filter, Sort and Grid uses a programming paradigm that allows dynamic rows and columns of data to flow. The Grid abstraction is able to pull rows of data as needed.

Here is the diagram converted to text form:

var grid = new Grid();
var csvReaderWriter = new CsvReaderWriter();
var filter = new Filter() { Column = "Name" };
new MainWindow()
    .WireTo(new Menu()
        .WireIn(new Menu("File"))
        .WireIn(new MenuItem("Open"))
        .WireIn(new OpenFileBrowser(extensions: new[] {"csv"}))
        .WireIn(csvReaderWriter)
        .WireIn(filter)
        .WireIn(new Sort() { Column = "Name" })
        .WireIn(grid)
    )
    .WireTo(new TextBox(label: "Filter by name")
        .WireTo(filter)
    )
    .WireTo(grid)
    .Run();

Note: if you already have monads in your programming library for things like sorting and filtering (such as LINQ or reactive extensions), then instead of creating new domain abstractions for Filter and Sort, you could just create a domain abstraction called _query_ and use LINQ or reactive extensions code to configure the query instance.

Note: WireTo() returns its first operand (this). WireIn() is the same as WireTo() except that it returns its second operand. These operators support the fluent coding style being used in this hand compiled code so that we don't have to think of names for every instance of an abstraction.

The methodology we have been following is that you write the application code (diagram) first (or part of it), just focusing on expressing the requirements. This causes you to invent domain abstractions and programming paradigms. Then you come up with an execution model that will make the programming paradigms execute. For example, the two interfaces listed below might be what you would come up with for the Event and PullTable programming paradigms:

// Programming paradigm: Event driven
interface IEvent
{
    void Execute();
}

// Programming paradigm: TableDataFlow
interface IPullTable : IEnumerable<List<string>>
{
    List<string> GetHeaderLabels();
}

This interface handles dynamic data (types unknown at compile-time) by crudely using strings. In a later project we will show a more sophisticated IPullTable interface that uses static typing for fields known at compile-time and an ExpandoObject for dynamic fields. We will also do a push version.

Both Filter and Sort will have both input and output ports of type IPullTable. The List in the IEnumerable represents one row of the table.
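To make the pull idea concrete for readers who work in C, here is a minimal sketch of a pull-style table paradigm and a Filter with one input port and one output port. It is an illustration under assumptions, not the interface used in the example projects: the names PullTable, ArraySource and Filter_Init are hypothetical, the number of columns is fixed for brevity, and header labels are left out.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define COLUMNS 2   /* fixed for brevity; IPullTable allows dynamic columns */

/* Programming paradigm: pull a table one row at a time.
   Hypothetical C rendering of the pull side of IPullTable. */
typedef struct PullTable {
    /* Returns the next row (COLUMNS strings), or NULL when no rows remain. */
    const char *const *(*next)(struct PullTable *self);
} PullTable;

/* An in-memory source of rows, standing in for the CSV reader. */
typedef struct {
    PullTable output;                     /* first member, see the cast below */
    const char *const (*rows)[COLUMNS];
    size_t rowCount;
    size_t position;
} ArraySource;

static const char *const *ArraySource_Next(PullTable *self)
{
    ArraySource *source = (ArraySource *)self;
    if (source->position >= source->rowCount) return NULL;
    return source->rows[source->position++];
}

void ArraySource_Init(ArraySource *source,
                      const char *const (*rows)[COLUMNS], size_t rowCount)
{
    source->output.next = ArraySource_Next;
    source->rows = rows;
    source->rowCount = rowCount;
    source->position = 0;
}

/* Domain abstraction: Filter. It pulls rows from its input port and
   passes on only the rows whose configured column equals the value. */
typedef struct {
    PullTable output;    /* port that the next stage pulls from */
    PullTable *input;    /* port wired to the previous stage */
    size_t column;       /* configuration: column to test */
    const char *value;   /* configuration: value to match */
} Filter;

static const char *const *Filter_Next(PullTable *self)
{
    Filter *filter = (Filter *)self;
    const char *const *row;
    assert(filter->input != NULL);   /* the input port must be wired */
    while ((row = filter->input->next(filter->input)) != NULL) {
        if (strcmp(row[filter->column], filter->value) == 0) return row;
    }
    return NULL;
}

void Filter_Init(Filter *filter, size_t column, const char *value)
{
    filter->output.next = Filter_Next;
    filter->input = NULL;
    filter->column = column;
    filter->value = value;
}
```

Wiring is again a pointer assignment in the layer above, for example `filter.input = &source.output;`. Rows only move when the downstream stage calls `next`, which is what lets a grid pull just the rows it needs.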

Don’t worry, we won’t be creating new programming paradigm abstractions at this rate for long. In fact we already have most of the ones we will use in all our example projects in this book.

Notice how in the above examples, we have used software engineering patterns we already know about, just in a different way. There is DSL (Domain Specific Language), Dependency Injection (which is what the WireTo operator does), Event driven programming, XAML-like UI layout (without the XML), RX (Reactive programming), monad-like wiring up, and the fluent style.

Notice how the application diagram in each case is both a direct representation of the requirements and the executable. It is executable because unlike conventional higher level or architecture diagrams, ALA application diagrams do not leave out details from the requirements. They are a complete expression of them. Instead, they leave out all details of implementation, which are taken care of by domain abstractions and programming paradigm abstractions.

To the extent that requirements are cohesive, so the code that expresses them should be. For example, we do not try to separate the specific UI, I/O, business logic, persistent data etc into different modules because they are highly cohesive for a given requirement. Most other architectural patterns, such as MVC, do separate in this way, which creates coupling. Instead we reduce the problem in a different way - through the use of domain abstractions which provide reusable aspects of implementation. All knowledge and details from the requirements end up in the application layer, but that’s all that goes there.

Note: A graph-like structure is showing up in these small applications because each requirement in itself contains a graph of connections. ALA embraces this and makes it explicit, which is why the requirements are best expressed as diagrams. In conventional code, the graph structure is still there, but it is typically obfuscated as many intermodule relationships, making it hard to see. Worse still, the natural cycles in the graph of relationships in the requirements would cause circular dependencies among conventional modules. Conventionally, some of these dependencies are broken by pulling data half the time rather than pushing, or worse still, by using indirections such as the observer pattern. These removals of dependencies don’t make the relationships themselves go away, they just make them harder to see. In ALA the inter-module relationships go away and become connection code purely contained inside a single module.

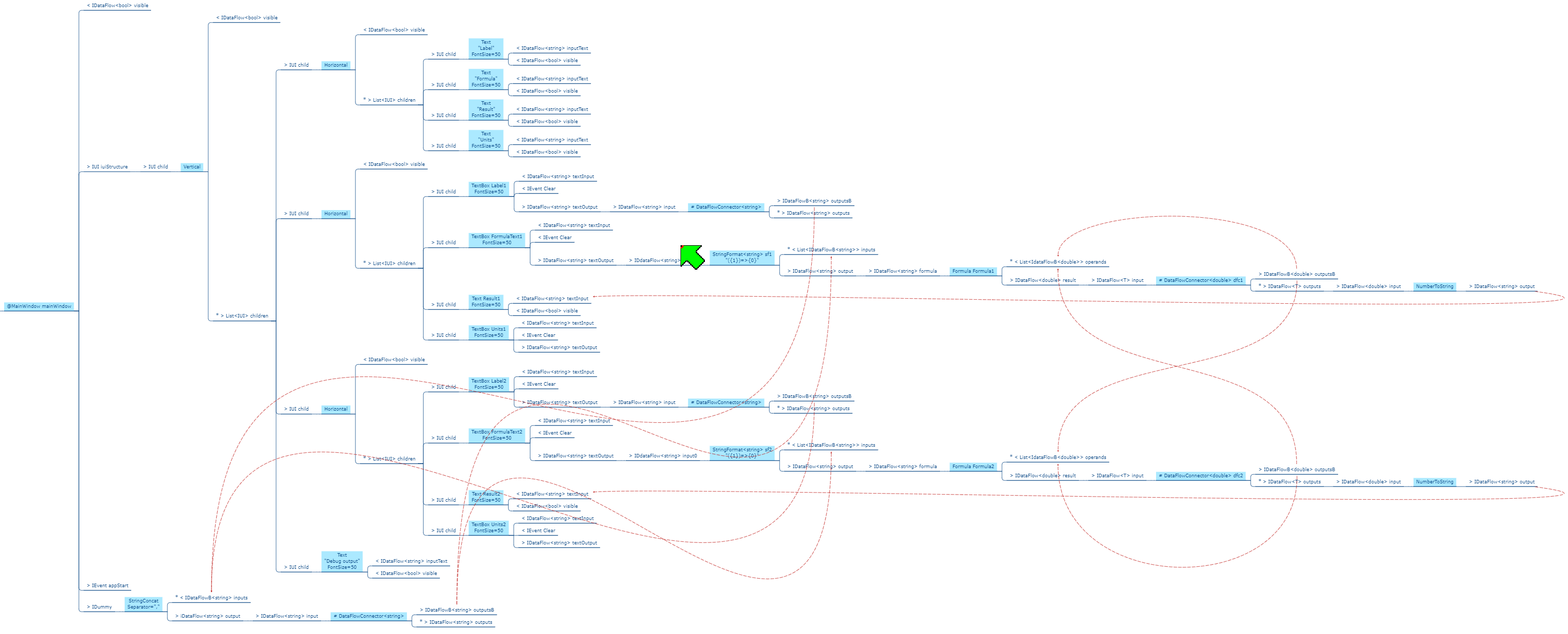

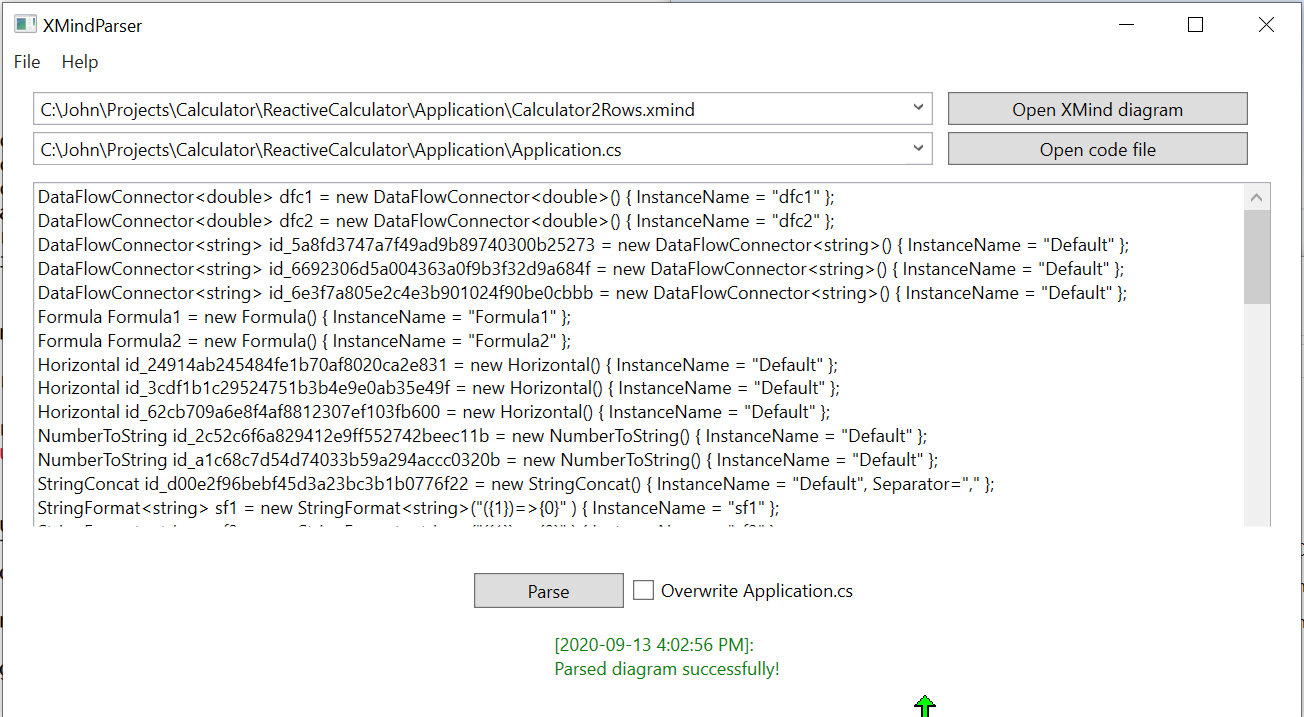

Although the fluent style is a nice way to hand-compile these small diagrams, code like this with indenting and nested brackets does not scale up well for large diagrams. (It is still better than the tangled web of dependencies they would form in conventional modular code though.) But we can do better. We will not be hand-writing code like this for large applications - we will automatically generate the code from the diagrams.

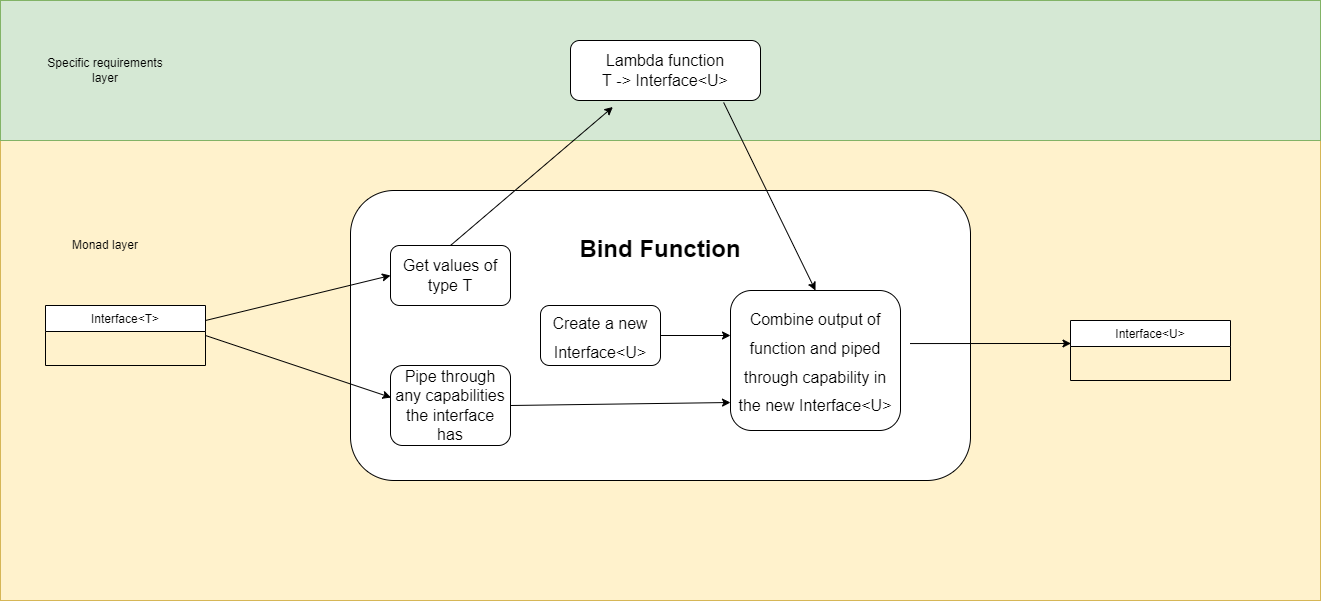

Finally, the wiring pattern used in the examples above is only one possible way of meeting the fundamental ALA constraints. For example, ALA can also be applied to functional programming using monads as domain abstractions. But we will use explicit objects with explicit classes and the wiring pattern shown above in most of our examples. The wiring itself is implemented by a field in the objects, but that field is immutable. ALA may appear to be synonymous with this wiring pattern, but actually ALA is just the three fundamental constraints stated at the beginning of this introduction.

This online book is a work in progress. ALA is research in progress. Please don’t hesitate to provide feedback to the e-mail address given at the top or in the comments facility at the end.

I would like to acknowledge the help of Roopak Sinha at AUT (Auckland University of Technology) for his significant academic contributions and ideas for ALA, and the contributions of many students who have implemented ALA projects, and helped refine the ALA methodology.

1. Chapter one - What problem does it solve?

If you have already experienced difficult to maintain code, big balls of mud, spaghetti code, or the CRAP cycle (Create, Repair, Abandon, rePlace), you can probably skip this chapter.

However, the example at the end is pretty cool - it starts with the type of typical C code that I see most students write, and then refactors it, step by step, into ALA-compliant code - you should take a look at that.

The problem that ALA solves can be seen from any one of the following perspectives.

1.1. The Big Ball of Mud

Brian Foote and Joseph Yoder popularized this term in their 1997 paper. It describes the default architecture when no other architecture is used. A similar term is spaghetti code. I think it describes the architecture of most software even when so-called architectural styles, such as layering, are used.

ALA is an in-the-large strategy to organise code. It provides the constraints needed for the code structure to not degenerate into a big ball of mud. As the software life cycle continues, retaining the organisation becomes easier rather than harder.

1.2. Simplify down the overwhelming set of architectural styles, patterns, and principles

There are many traditional architectural styles, patterns, principles and paradigms. The problem of structuring software code to meet quality attributes involves mastering an overwhelming number of them. Here are some examples:

-

loose coupling and high cohesion, information hiding, separation of concerns

-

DSLs, aspects, model driven, MVC, inversion of control, functional programming, UML Class diagrams, sequence diagrams, activity diagram, state diagram.

-

Views, Styles, Patterns, Tactics, Models, ADL’s, ADD, SAAM, ATAM, 4+1, Decomposition

-

CBD/CBSE, Components & Connectors, Pipes & Filters, n-tier, Client/Server, Plug-in, Microservices, Monolithic, Contracts, Message Bus

-

Modules, Components, Layers, Classes, Objects, Abstraction, Granularity

-

Semantic coupling, Syntax coupling, Temporal coupling, existence coupling, Good and bad dependencies, Collaboration

-

Interfaces, Polymorphism, Encapsulation, Contracts, Interface Intent

-

Execution models, Event-Driven, Multithreaded, Mainloop, Data-driven, Concurrency, Reactor pattern, Race condition, Deadlock, Priority Inversion, Reactive

-

Principles: SRP, OCP, LSP, ISP, DIP; MVC, MVP, etc

-

Design Patterns: GOF patterns, GRASP patterns, Layers, Whole-Part, Observer, Strategy, Factory method, Wrapper, Composite, Decorator, Dependency Injection, Callbacks, Chain of Responsibility, etc

-

Expressiveness, Fluency, DDD, Coding guidelines, Comments

-

Programming Paradigms, Imperative, Declarative, Object oriented design, Activity-flow, Work-flow, Dataflow, Function blocks, Synchronous, State machine, GUI layout, Navigation-flow, Data Schema, Functional, Immutable objects, FRP, RX, Monads, AOP, Polyglot-Programming Paradigms

-

Messaging: Push, Pull, Synchronous, Asynchronous, Shared memory, Signals & Slots

-

Memory management, Heap, Persistence, Databases, ORMs

-

Waterfall, Agile, Use cases, User stories, TDD, BDD, MDSD

Mastering all these topics takes a lifetime. Even if you can, juggling them all and being able to use the right ones at the right time is extremely taxing on any developer. Add to that the mastering of technologies and tools, keeping to agile sprint deadlines, and commitment to your team and management, and it becomes an almost impossible task. 'Working code' tends to be what the team is judged on, especially by project managers or product owners who have no direct interest in architecture or even the Definition of Done. They don’t want to know about the rather negative sounding term, "technical debt".

Most texts will tell you that these are all tools and that you need to use the right tools for each job. It all depends, they say, on the particular system, and its particular functional and non-functional requirements. In most cases they end up being used in an ad-hoc manner that doesn’t work well. In some cases their use is actually harmful.

Being a pre-worked recipe of the aforementioned styles and patterns, ALA probably contains no truly novel ideas. Every aspect of what ALA does can be found already done by someone. However the combination that ALA uses is as far as I know unique.

Some ingredients from the above list are accentuated in importance more than you might expect (such as abstraction). Some are relatively neutral. The biggest surprise for me during the conception process of ALA was that some well-established software engineering memes seemed to be in conflict. Eventually I concluded that they were in fact bad (such as UML class diagrams). We will discuss these in detail in subsequent chapters.

Like any good recipe, the ingredients work together to form a whole that is greater than the sum of the parts. The resulting code quality is significantly ahead of what the individual memes do by themselves. It continues to surprise me just how effective, and enjoyable, the simplified view is.

1.3. An optimal solution for quality attributes

ALA is an optimal solution for these quality attributes:

-

Readability

-

Complexity

-

Maintainability

-

Testability

-

Understandability

-

Modifiability

-

Extensibility

-

Dependability

-

Reusability

It is independent of any specific domain, so it is a general reference architecture. By optimal, I mean that it makes these qualities as good as they can be.

If other non-functional requirements are also important, ALA provides a good starting point.

-

Performance

-

Availability

-

Scalability

-

Portability

-

Distributability

-

Security

-

Usability

-

Fault-tolerance

Even if the ALA structure must be compromised in places for other qualities, it is still better to start with these quality attributes optimised and deviate from them as necessary. As it happens, the maintainability resulting from ALA frequently makes other quality attributes easier to achieve as well. For example, in an ALA application it is often easy to make performance optimizations in the execution model that don’t affect the application code. Similarly, an application first written to run on a single processor can more easily be distributed to multiple processors. Or, you can port an application by swapping out domain abstractions without changing the application code.

1.3.1. Readability

Modules don’t necessarily make pieces of code that are readable in isolation.

ALA code is readable, not because of style, convention, comments or documentation, but because any one piece of code appears to you as a separate uncoupled little program that is readable in complete isolation.

1.3.2. Complexity

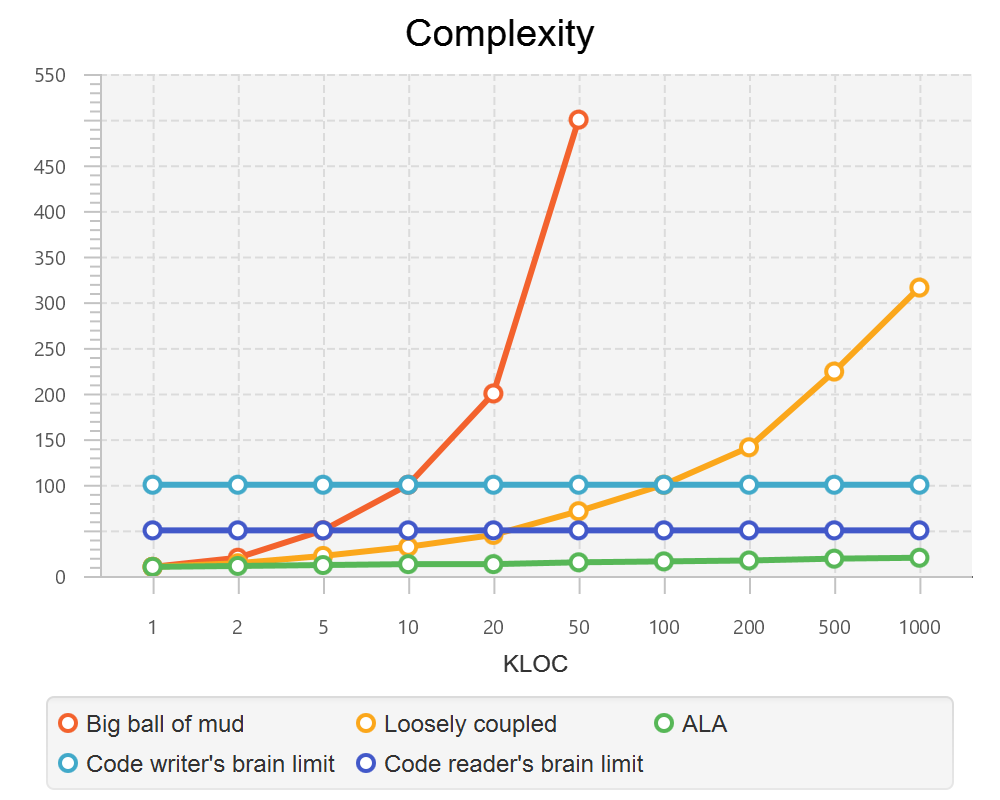

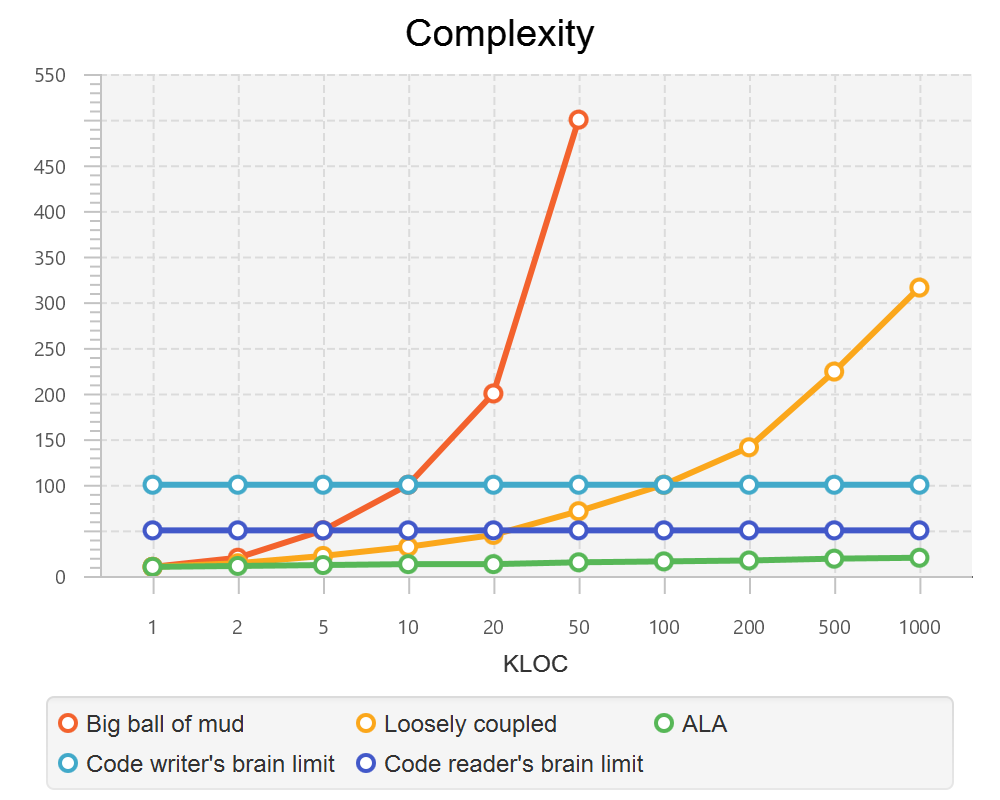

There is a meme in the software industry that says that the complexity of software must be some function of its size. This need not be so. With proper use of abstraction it is possible to have complexity that is constant regardless of program size. ALA makes use of this.

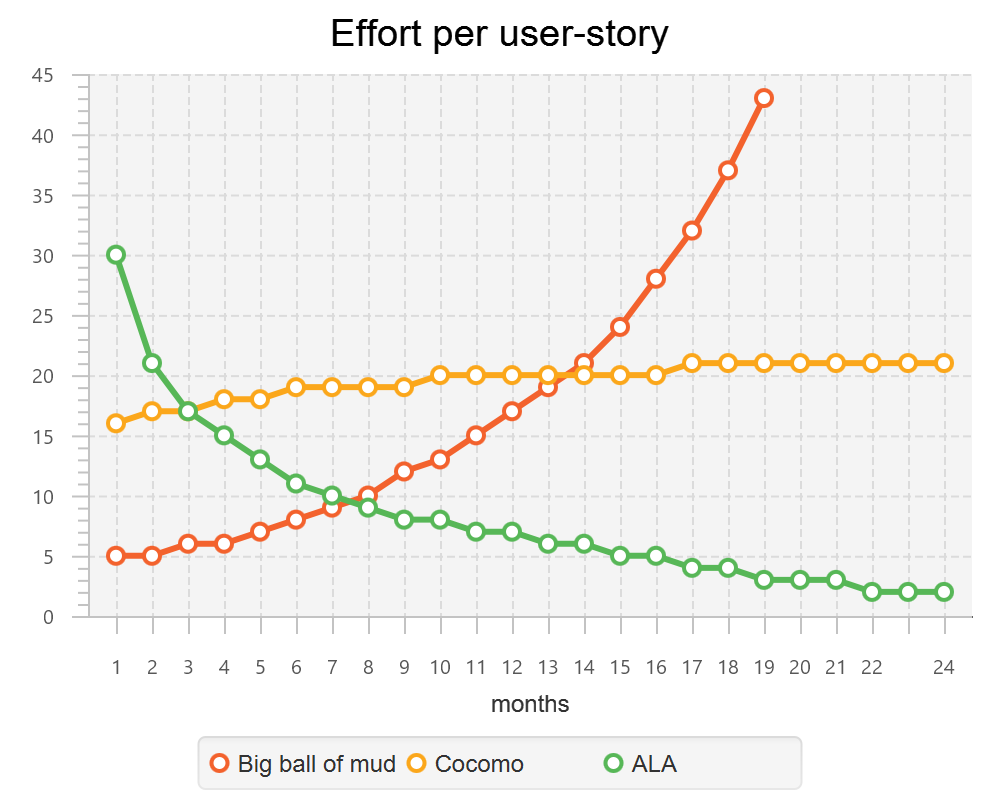

This is a qualitative graph comparing the complexity of an ALA application with that of a big ball of mud and an average loosely coupled application. This is further explained in chapter seven.

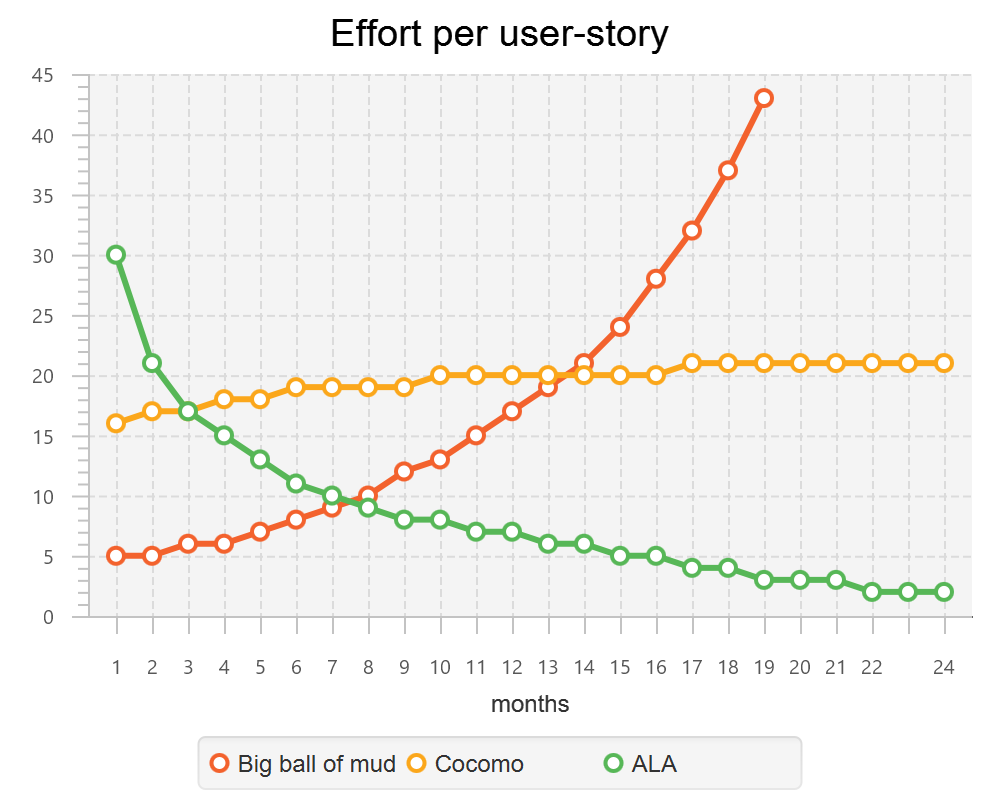

1.3.3. Maintainability

The maintenance effort over time should qualitatively follow the green curve in the graph below because, as software artefacts are written, their reuse should reduce the effort required for other user stories. Product owners seem to have an innate sense that we manage to organise our code such that this happens. That is why they get so frustrated when things seem to take longer and longer over time, and they often ask us, "Haven’t we done this before?" In practice, too often we follow the red curve. Maintenance eventually gets so difficult that we want to throw the code away and start again. We reason we can do better. My experience is that we don’t do better when we rewrite. We just create another mess. The belief that we can do better is just a psychological bias on the part of the developer, caused by a combination of a) the Dunning-Kruger effect and b) the fact that it is easier to read our own recently written code than someone else’s.

If we apply all the well known styles and principles, the best we typically seem to manage is the orange curve, which comes from the COCOMO models, and which still has maintenance effort continuously increasing.

When we did an experimental re-write of a legacy application using ALA, and measured its maintainability attribute, it came out as improving over time by several different measures.

ALA is based on the theoretical architectural constraints needed to follow the green curve.

1.3.4. Testability

In ALA all code is testable. ALA makes it clear when to mock and when to test with dependencies in place. All dependencies are left in place, because all dependencies are design-time or knowledge dependencies.

Therefore, when testing the application layer abstractions, they are tested with their domain abstraction dependencies. In other words, testing the application is acceptance testing.

Testing domain abstractions is easy with unit tests because abstractions are zero-coupled. Mock objects are wired to ports.
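As a minimal sketch of what wiring a mock to a port can look like, here is a C unit test for a Sensor domain abstraction. Everything in it is an assumption made for illustration: the names DataFlowBool, Sensor_OnReading and MockDataFlowBool are hypothetical, and so is the behaviour that "dark" means a light level below the configured threshold.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Programming paradigm: dataflow of bool values (hypothetical C rendering). */
typedef struct DataFlowBool {
    void (*send)(struct DataFlowBool *self, bool data);
} DataFlowBool;

/* Domain abstraction under test: Sensor.
   Assumed behaviour: it reports true ("dark") when the light level
   is below its configured threshold. */
typedef struct {
    double threshold;       /* configuration */
    DataFlowBool *output;   /* output port */
} Sensor;

void Sensor_OnReading(Sensor *sensor, double lightLevel)
{
    assert(sensor != NULL);
    if (sensor->output != NULL) {
        sensor->output->send(sensor->output, lightLevel < sensor->threshold);
    }
}

/* Mock that can be wired to any DataFlowBool output port.
   It records how often it was called and the last value received. */
typedef struct {
    DataFlowBool input;     /* first member, so the cast in Mock_Send is valid */
    int callCount;
    bool lastValue;
} MockDataFlowBool;

static void Mock_Send(DataFlowBool *self, bool data)
{
    MockDataFlowBool *mock = (MockDataFlowBool *)self;
    mock->callCount++;
    mock->lastValue = data;
}

void Mock_Init(MockDataFlowBool *mock)
{
    mock->input.send = Mock_Send;
    mock->callCount = 0;
    mock->lastValue = false;
}

/* Unit test: returns true if Sensor behaves as assumed above. */
bool Test_SensorReportsDarkBelowThreshold(void)
{
    MockDataFlowBool mock;
    Sensor sensor = { 0.5, NULL };

    Mock_Init(&mock);
    sensor.output = &mock.input;    /* wire the mock to the port */

    Sensor_OnReading(&sensor, 0.2); /* below threshold: dark */
    if (mock.callCount != 1 || mock.lastValue != true) return false;

    Sensor_OnReading(&sensor, 0.9); /* above threshold: not dark */
    return mock.callCount == 2 && mock.lastValue == false;
}
```

The test needs no Light, AndGate or application code. The only thing Sensor depends on is the dataflow paradigm, and that dependency is left in place rather than mocked.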

1.4. Structure hidden inside the modules

The problem in most large code bases is that the system structure, the structure at the largest scale, is not explicit. It is distributed inside the modules themselves. Collaboration between modules is implicitly hidden inside them. Finding this structure, even for a single user story can be time consuming. I have often spent a whole day doing that, doing countless all-files searches following function calls or method calls of the user story through many modules just to end up changing one line of code in the end. Many developers I have spoken to can identify with this experience.

I call this situation SMITA (Structure Missing in the Action). The internal structure is sometimes drawn as a model - high-level documentation of the hidden structure. But such models are a secondary source of truth.

It can get a lot worse as the system gets larger. In a seemingly bizarre twist, the more loosely coupled you make the elements, the harder it gets to trace a user story because of the indirections. Some people conclude that loose coupling and being able to trace through a user-story are naturally in conflict. They are actually not in conflict.

ALA is an architecture that has full indirection at run-time while at the same time having no indirection at design-time because the system is implemented all in one cohesive place.

1.5. The CRAP cycle

Typical bright young engineers come out of university knowing C++ or Java (or another C*, low-level, imperative language that mimics the silicon), and are confident that, because the language is Turing-complete, if they string together enough statements, they can accomplish anything. At first they can. There hardly seems a need for a software architect to be involved. And besides, we are told that a design can emerge through incremental refactoring.

As the program gets larger, things get a little more complicated, but the young developer’s brain is still up to the task, not realizing he has already surpassed anyone else’s ability to read his code. He is still able to get more features working. One day parts of the code 'transition' to complex. It becomes somewhere you don’t want to go. On the Cynefin diagram, it has moved from the complicated quadrant to the complex quadrant. And now it is trapped there. It is too complex for refactoring.

The incremental effort to maintain starts to eat away and eventually exceed the incremental increase in value. This now negative return causes the codebase itself to eventually lose value, until it is no longer an asset to the business.

It has transitioned to chaos. It will be abandoned. When a new bright young engineer who knows C* arrives, he looks at the legacy codebase and is convinced that he can do better. And the cycle repeats. This is the CRAP cycle (Create, Repair, Abandon, rePlace). ALA is the only method I know that can prevent the CRAP cycle.

1.5.1. A short history of ALA

From early on in my career, I experienced the CRAP cycle, not so much rewriting applications, but trying to avoid the mess when writing new ones. When starting from a blank piece of paper, I would research all the architectural styles and principles. I would come across things like 'loose coupling', and I remember asking myself, yes but how does one accomplish that? Each time I would still fail.

I started searching for a pre-worked, generally applicable, 'template architecture' that would tell me what the organisation of the code should look like for any program. I searched for such a thing many times over a long career and never found one. Some would say that this is because the highest level structure depends on project specific requirements.

Finally, near the end of my career, I have that template meta-structure that’s applicable to all programs. The turning point was when I noticed two (accidental) successes in parts of two projects. These successes were only noticed years later, 15 years in one case and 5 years in the other. They had each undergone considerable maintenance during that time. But their simplicity had never degraded and their maintenance had always been straightforward. It was like being at a rubbish dump and noticing two pieces of metal that had never rusted. "That’s weird", you think to yourself. "What is going on here?"

One of them had the same functionality as another piece of software that I had written years earlier. That software was the worst I had ever written. It was truly a big ball of mud, and maintenance had become completely impossible, causing the whole product to be abandoned. So it wasn’t what the software did that made the difference between good and bad. It was how it was done.

Analysing the common properties of those two code bases gave clues that eventually resulted in a theoretical understanding of how to deal with complex systems. This meta-structure is what I now call Abstraction Layered Architecture.

Subsequently, I ran some experiments to see if the maintainability and non-complexity could be predictably reproduced. These experiments, which have worked spectacularly well so far, are discussed as a project at the end of each chapter.

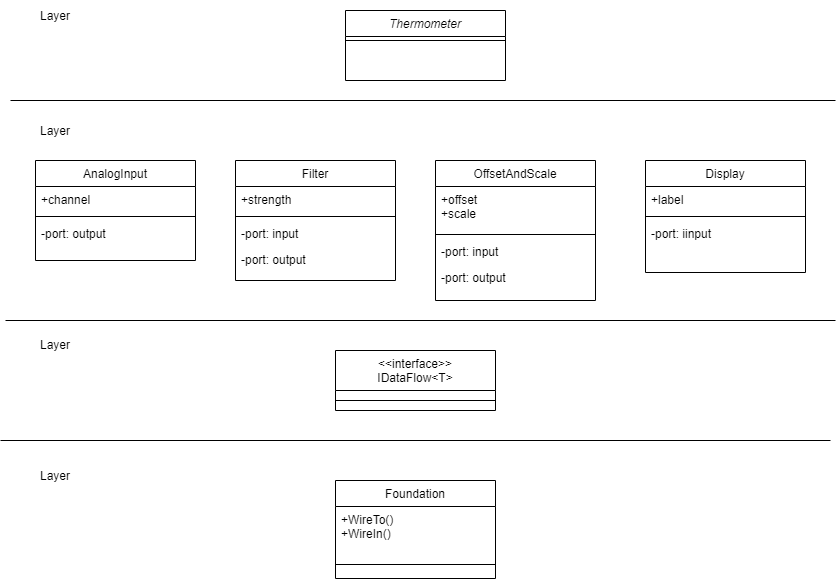

1.6. Example project - Thermometer

In this example project, we will first do conventional C code using functions, then refactor it into abstraction layers, and finally improve on that using classes.

Functions have an execution model we are already familiar with, making this first example easier to understand. However, keep in mind that, for whole programs, this execution model does not usually make a good programming paradigm. An emergent property of ALA is its support of multiple and diverse programming paradigms including your own. We do this to improve expressiveness of the requirements.

Nevertheless, functional composition is a passable programming paradigm for a tiny, dedicated embedded program in a micro-controller such as our thermometer. Let’s have a look at some typical code:

1.6.1. Bad code

    // configurations.h
    #define BATCHSIZE 100

    // application file
    #include "configurations.h"

    // DisplayTemperature is assumed to be declared elsewhere
    void ConfigureTemperaturesAdc(void);
    void GetTemperaturesFromAdc(int temperatures[]);
    void ProcessTemperatures(int adcs[]);
    void ResampleTemperature(float temperature);
    float SmoothTemperature(float temperature);

    int main(void)
    {
        int temperatures[BATCHSIZE];

        ConfigureTemperaturesAdc();
        while (1)
        {
            GetTemperaturesFromAdc(temperatures); // gets a batch of readings at a time
            ProcessTemperatures(temperatures);
        }
    }

    void ProcessTemperatures(int adcs[])
    {
        float temperature;

        for (int i = 0; i < BATCHSIZE; i++) {
            temperature = (adcs[i] + 4) * 8.3f; // convert adc to Celsius
            temperature = SmoothTemperature(temperature);
            ResampleTemperature(temperature);
        }
    }

    void ResampleTemperature(float temperature)
    {
        static int counter = 0;

        counter++;
        if (counter == 15)
        {
            DisplayTemperature(temperature);
            counter = 0;
        }
    }

    // smooth the reading before displaying
    float SmoothTemperature(float temperature)
    {
        static float filtered = 0;

        filtered = filtered*9/10 + temperature/10;
        return filtered;
    }

    // ADC file
    #include "configurations.h"

    void ConfigureTemperaturesAdc(void)
    {
        // configure ADC channel 2 to do DMA BATCHSIZE values at a time
    }

    void GetTemperaturesFromAdc(int temperatures[])
    {
        for (int i = 0; i < BATCHSIZE; i++) {
            temperatures[i] = ReadAdcChannel(2); // pseudocode here for the adc read
        }
    }

At first this code won’t look that bad, but that’s only because the whole program is so small. It looks modular, but you still have to read all of it to understand any part of it. That’s possible for small programs, but of course that strategy won’t scale up.

As we are taught to do, different responsibilities of the thermometer implementation have been separated out into smaller pieces with smaller responsibilities, although ProcessTemperatures appears to have three responsibilities. The problem is that all the pieces are in some way collaborating to make a thermometer. They are all coupled in some way, either explicitly or implicitly. That’s why we have to read all the code to understand the thermometer. Scale this up to 5000 lines of code, and we will have a big mess.

We are going to refactor the program using the ALA strategy:

- Every piece of knowledge about 'being a thermometer' will be in one function.
- That 'Thermometer' function will be at the top.
- That function will do no real work itself.
- How to do more abstract things will be put into other functions.
- Those functions will not know anything about temperature or thermometer.
- The top layer function will compose the abstract functions it needs to build a thermometer.

1.6.2. Toward ALA code

    #include <stdbool.h>

    #define DMABATCHSIZE 100

    // GetAdcReadings, FloatToString and Display are assumed to be declared elsewhere
    float OffsetAndScale(float data, float offset, float scale);
    float Filter(float input, int strength);
    bool SampleEvery(int n);

    int main(void)
    {
        int adcs[DMABATCHSIZE];
        float temperatureCelcius;
        float smoothedTemperatureCelcius;

        while (1)
        {
            GetAdcReadings(adcs, 2, DMABATCHSIZE); // channel=2
            for (int i = 0; i < DMABATCHSIZE; i++) {
                temperatureCelcius = OffsetAndScale(adcs[i], 4, 8.3f); // offset=4, slope=8.3
                smoothedTemperatureCelcius = Filter(temperatureCelcius, 10);
                if (SampleEvery(15))
                {
                    Display(FloatToString(smoothedTemperatureCelcius, "#.#"));
                }
            }
        }
    }

    // offset and scale a value
    float OffsetAndScale(float data, float offset, float scale)
    {
        return (data + offset) * scale;
    }

    // IIR 1st order filter, higher strength gives a lower cutoff frequency
    float Filter(float input, int strength)
    {
        static float filtered = 0.0f;

        filtered = (filtered * (strength-1) + input) / strength;
        return filtered;
    }

    // Returns true every n times it is called
    bool SampleEvery(int n)
    {
        static int counter = 0;
        bool rv;

        counter++;
        if (counter >= n)
        {
            counter = 0;
            rv = true;
        }
        else
        {
            rv = false;
        }
        return rv;
    }

The code now begins to be arranged into two abstraction layers, the application layer and the domain abstractions layer. The application is now the only function that knows about being a thermometer. (It is still doing some logic work - the 'for loop' and 'if statement', which we will address soon.)

All the other functions are now more abstract - they know nothing about thermometers - GetAdcReadings, OffsetAndScale, SampleEvery, Filter, FloatToString, and Display. Notice that the word 'thermometer' has been removed from their names, and none of them contain constants or any other references that are to do with a thermometer or temperature.

These abstract functions give you six things:

1. Abstract functions are way easier to learn and remember what they do.
2. Abstract functions give design-time encapsulation, i.e. zero coupling.
3. Abstract functions can be understood by themselves.
4. Abstract function interfaces are way more stable - as stable as the concept of the abstraction itself.
5. Abstract functions are reusable.
6. Abstract functions are testable.

As a consequence of item 1, the application function can also now be understood by itself.
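Because the abstract functions carry no thermometer knowledge, each one can be exercised alone and reused in a different application. The following is a minimal sketch in C of that idea, not part of the thermometer itself. OffsetAndScale and SampleEvery repeat the definitions from the listing above so that the block is self-contained; CelsiusToFahrenheit and CountSamples are hypothetical helpers introduced only for illustration, and the assert in SampleEvery is a precondition added for this sketch.

```c
#include <assert.h>
#include <stdbool.h>

// offset and scale a value
float OffsetAndScale(float data, float offset, float scale)
{
    return (data + offset) * scale;
}

// Returns true every n times it is called
bool SampleEvery(int n)
{
    static int counter = 0;

    assert(n > 0); // precondition added for this sketch
    counter++;
    if (counter >= n) {
        counter = 0;
        return true;
    }
    return false;
}

// Reuse in a different application (hypothetical helper):
// F = C * 1.8 + 32 rewritten as (C + 32/1.8) * 1.8
float CelsiusToFahrenheit(float celsius)
{
    return OffsetAndScale(celsius, 32.0f / 1.8f, 1.8f);
}

// Test in isolation (hypothetical helper):
// counts how many of `calls` successive calls return true
int CountSamples(int n, int calls)
{
    int hits = 0;
    for (int i = 0; i < calls; i++) {
        if (SampleEvery(n)) {
            hits++;
        }
    }
    return hits;
}
```

Neither helper needs an ADC, a display or any other thermometer code to be present, which is what makes the functions below the application layer cheap to test.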

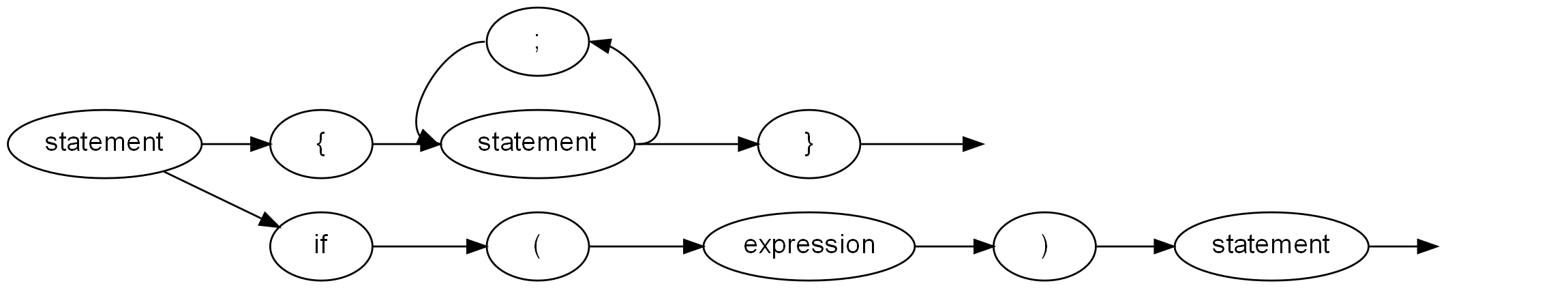

Now let’s go one more step and create an abstraction to do what that for loop does. This may seem like a retrograde step, but we need to understand this mechanism to reach our final goal of expressing the requirements through pure composition of abstractions. We want to move the 'for loop' out into its own abstraction, but we don’t want to move the code that’s inside it. We accomplish this by putting that inner code into another function and passing that function to the for-loop function:

1.6.3. Further toward ALA code

    #define DMABATCHSIZE 100

    // the domain abstraction functions are assumed to be declared elsewhere
    void func1(int adc);
    void foreach(int values[], void (*f)(int), int count);

    int main(void)
    {
        int adcs[DMABATCHSIZE];

        ConfigureAdc(2, DMABATCHSIZE);
        while (1)
        {
            GetAdcReadings(adcs, 2, DMABATCHSIZE); // channel=2
            foreach(adcs, func1, DMABATCHSIZE);
        }
    }

    void func1(int adc)
    {
        float temperatureCelcius;
        float smoothedTemperatureCelcius;

        temperatureCelcius = OffsetAndScale(adc, 4, 8.3f); // offset=4, slope=8.3
        smoothedTemperatureCelcius = Filter(temperatureCelcius, 10);
        if (SampleEvery(15))
        {
            Display(FloatToString(smoothedTemperatureCelcius, "#.#"));
        }
    }

    // An array parameter decays to a pointer, so the element count is passed explicitly
    void foreach(int values[], void (*f)(int), int count)
    {
        for (int i = 0; i < count; i++) {
            (*f)(values[i]);
        }
    }

"func1" is not an abstraction - you cannot give it a name and learn a simple concept of what it does. That’s why I gave it a nondescript name. The content of func1 is cohesively just part of the thermometer application. The name func1 only serves as a symbolic connection within cohesive code - nothing more than a wiring between two points in the program. In this case func1 is immediately below where it is used in the same small file. But as a program grows, these symbolic wirings become hard to follow. You would need to resort to text searches to find these connections. These types of connections can be numerous and unstructured in larger programs, and the best way to deal with them is diagrams. A line on a diagram is like a symbolic connection between two points, but it’s anonymous and easy to follow. However, this particular one can be dealt with in text form. So let’s go ahead and remove it by using an anonymous function directly as the second parameter of foreach:

    #define DMABATCHSIZE 100

    // pseudocode: standard C has no lambda syntax
    int main(void)
    {
        int adcs[DMABATCHSIZE];
        float temperatureCelcius;
        float smoothedTemperatureCelcius;

        ConfigureAdc(2, DMABATCHSIZE);
        while (1)
        {
            GetAdcReadings(adcs, 2, DMABATCHSIZE); // channel=2
            foreach(adcs, (adc)=>{
                temperatureCelcius = OffsetAndScale(adc, 4, 8.3f); // offset=4, slope=8.3
                smoothedTemperatureCelcius = Filter(temperatureCelcius, 10);
                if (SampleEvery(15))
                {
                    Display(FloatToString(smoothedTemperatureCelcius, "#.#"));
                }
            }, DMABATCHSIZE);
        }
    }

It uses the lambda syntax '()=>{}', which is worth getting used to if you are not already familiar with it. It’s a function without a name, so think of the => as standing in for the name of the function, the round brackets as the parameters, and the curly braces as the body of the function.

The next thing we want to do is get rid of the while loop, get rid of the indenting, and stop handling the data that is being passed from one function to another. None of them have anything to do with a thermometer. All those intermediate holding variables: adcs, temperatureCelcius, etc are all just symbolic connections. They are too much work when we just want to compose our thermometer from abstractions.

The while loop and all the indenting are there only because we have 'execution flow' tied in with our composition of abstractions. Basically we want to make control of execution flow another abstraction so that the thermometer can be built by just composing abstractions rather than writing executing code.

To do this we will first show how it’s done using monads. If you don’t know about monads, just skip this section, as we don’t need this step to understand our final goal. But for those who do understand monads, it is interesting to visit this step to see why the functional programming guys invented them. Then in the following step we will go to ordinary classes with ports instead of monads.

1.6.4. Brief detour: composing with monads

    void main()
    {
        program = new ADC(channel=2, batchSize=100)
            .foreach()
            .OffsetAndScale(offset=4, slope=8.3)
            .Filter(strength=10)
            .SampleEvery(15)
            .NumberToString(format="#.#")
            .Display();
        program.Run();
    }

Monads have allowed us to separate execution flow from composition flow. The composition flow is now a pure dataflow paradigm. Data will flow from the ADC to the display, so that is directly represented by the composition. How it executes is separated out, and we will go into how that works shortly. Let’s first understand the 'composition' and why this is so important.

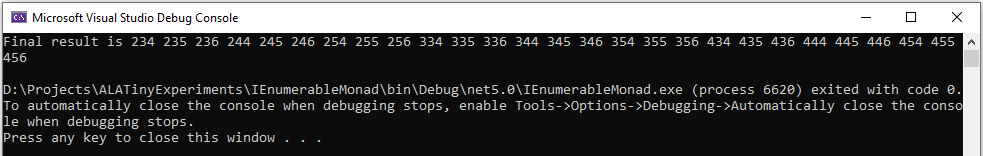

Even if you don’t understand how the monads work, you can see that syntactically the program is now very nice because all it does is compose instances of abstractions, and configure them with constants to be a thermometer. The composition is not declarative - it is dataflow, because dataflow suits how to describe the thermometer. If we let go of how it executes and just trust that the dataflow from one instance of an abstraction to the next works, the program becomes highly readable.

We are using the word 'composition' here to mean the things we are joining together in adjacent lines of code. It can also mean joining boxes with lines in a diagram. Think of a composition as analogous to the adjacent notes in a music score, which are always played successively. If the lines of code are statements or function calls, we are composing things for imperative execution by the CPU. If the lines of code are data processors, we are composing things for successive processing of data. The output of one passes directly to the input of the next.

If we are stuck with thinking in terms of imperative execution flow (the only way of thinking in the C language) we will need to try hard to let that go, and realize that in ALA, 'composition' can be any programming paradigm you want.

Also notice that the first statement just builds the program. Then the second statement sets it running. This two stage aspect of monads is common in the programming paradigms we will use in ALA. It is because the underlying execution flow is not the same as the flow of the programming paradigm. We first wire it up, and then we tell the wired up structure to 'execute'.

There is a second important difference from the while loop version. The while loop version handled the data itself. Each function returned the data which was stored in a local, otherwise useless, variable and then passed into the next function. The monad code doesn’t do that. Instead, it creates and wires together objects which will, at run-time, send the data directly from one to another via an interface. This does not mean that the abstractions themselves know anything about each other - they are still zero coupled. But the application now doesn’t have to deal with data. It just has to compose abstractions.
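The two-stage idea (wire first, run second) and the direct object-to-object dataflow can be sketched in plain C with function pointers, without any monad library. This is only an illustrative sketch under stated assumptions: the Stage struct, Emit, the three push functions and RunSketch are hypothetical names, the display is replaced by a sink that records what it receives, and the sampling interval is 3 rather than 15 to keep the example short.

```c
#include <assert.h>
#include <stddef.h>

// A stage has one input port (push) and one output port (next).
typedef struct Stage Stage;
struct Stage {
    void (*push)(Stage *self, float value); // input port: called by the upstream stage
    Stage *next;                            // output port: set once, at wiring time
    float offset, scale;                    // configuration for OffsetAndScalePush
    int every, count;                       // configuration and state for SampleEveryPush
    float last;                             // last value seen by SinkPush
    int received;                           // number of values seen by SinkPush
};

// Forward a value to whatever is wired to the output port, if anything.
static void Emit(Stage *self, float value)
{
    if (self->next != NULL) {
        self->next->push(self->next, value);
    }
}

static void OffsetAndScalePush(Stage *self, float value)
{
    Emit(self, (value + self->offset) * self->scale);
}

// Passes on every `every`-th value and drops the rest.
static void SampleEveryPush(Stage *self, float value)
{
    if (++self->count >= self->every) {
        self->count = 0;
        Emit(self, value);
    }
}

// Stands in for the display: it only records what arrives.
static void SinkPush(Stage *self, float value)
{
    self->last = value;
    self->received++;
}

// Returns how many values reached the sink and stores the last one in *lastOut.
int RunSketch(const int adcs[], int count, float *lastOut)
{
    assert(adcs != NULL && lastOut != NULL);

    // 1. Build: instantiate and wire, last stage first so the pointers are valid.
    Stage sink    = { .push = SinkPush };
    Stage sampler = { .push = SampleEveryPush, .next = &sink, .every = 3 };
    Stage scaler  = { .push = OffsetAndScalePush, .next = &sampler,
                      .offset = 4.0f, .scale = 8.3f };

    // 2. Run: the application only feeds the head of the chain.
    for (int i = 0; i < count; i++) {
        scaler.push(&scaler, (float)adcs[i]);
    }

    *lastOut = sink.last;
    return sink.received;
}
```

Once wired, the application code never touches an intermediate value. Each stage knows only the push signature of its neighbour, not which stage that neighbour is.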

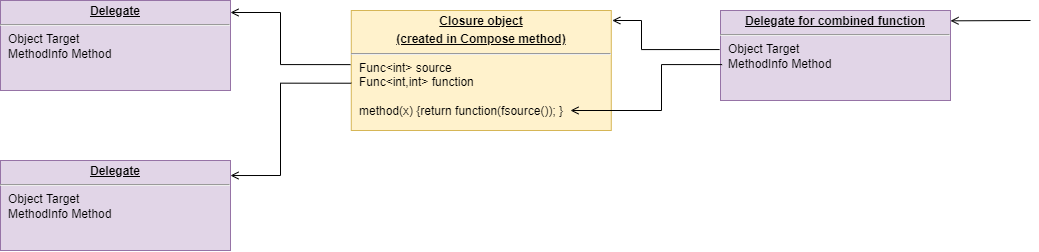

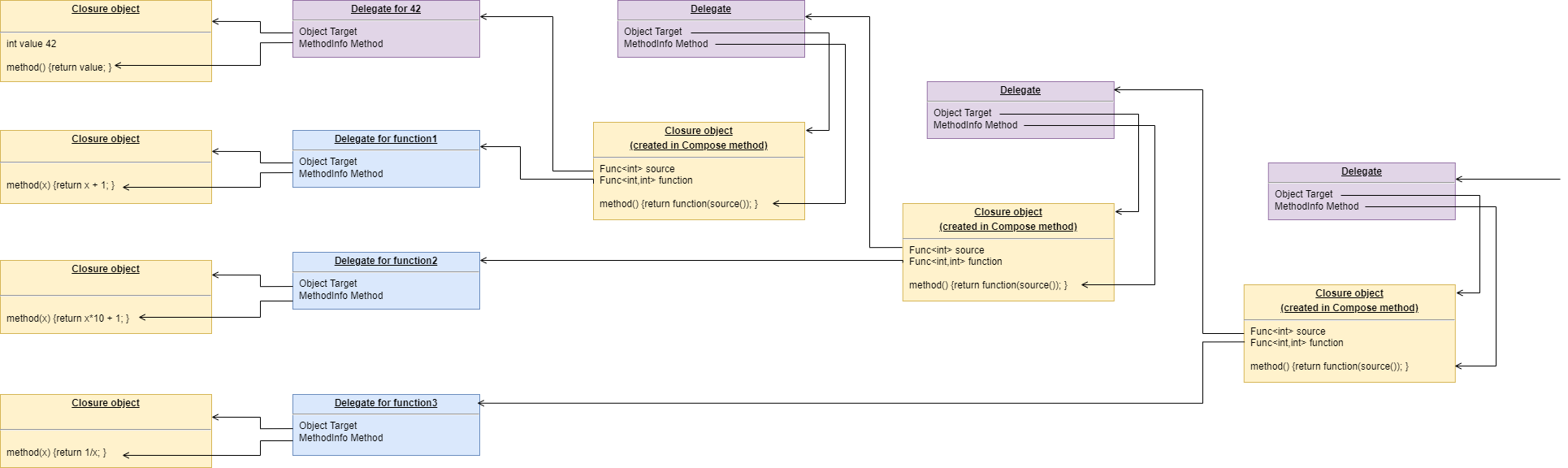

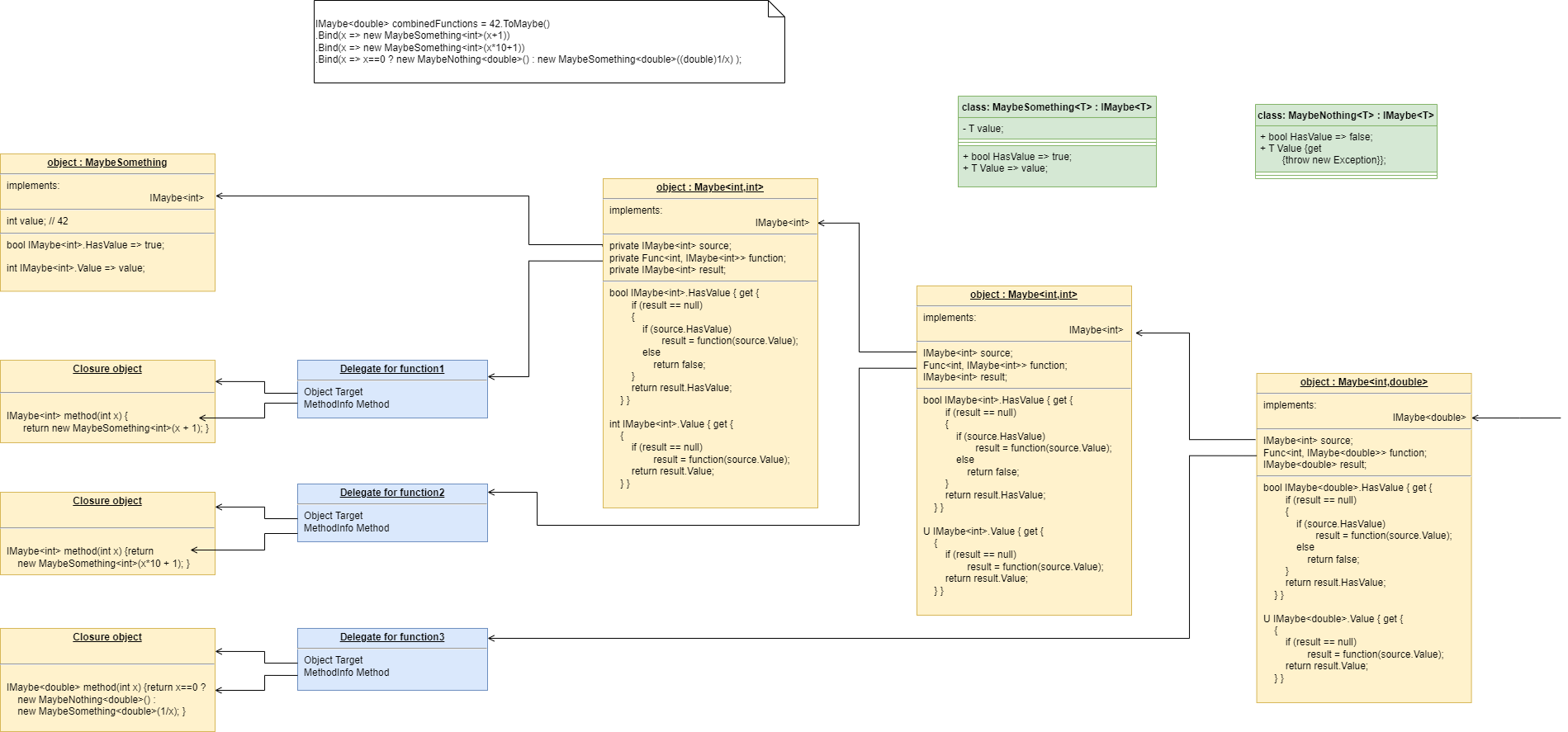

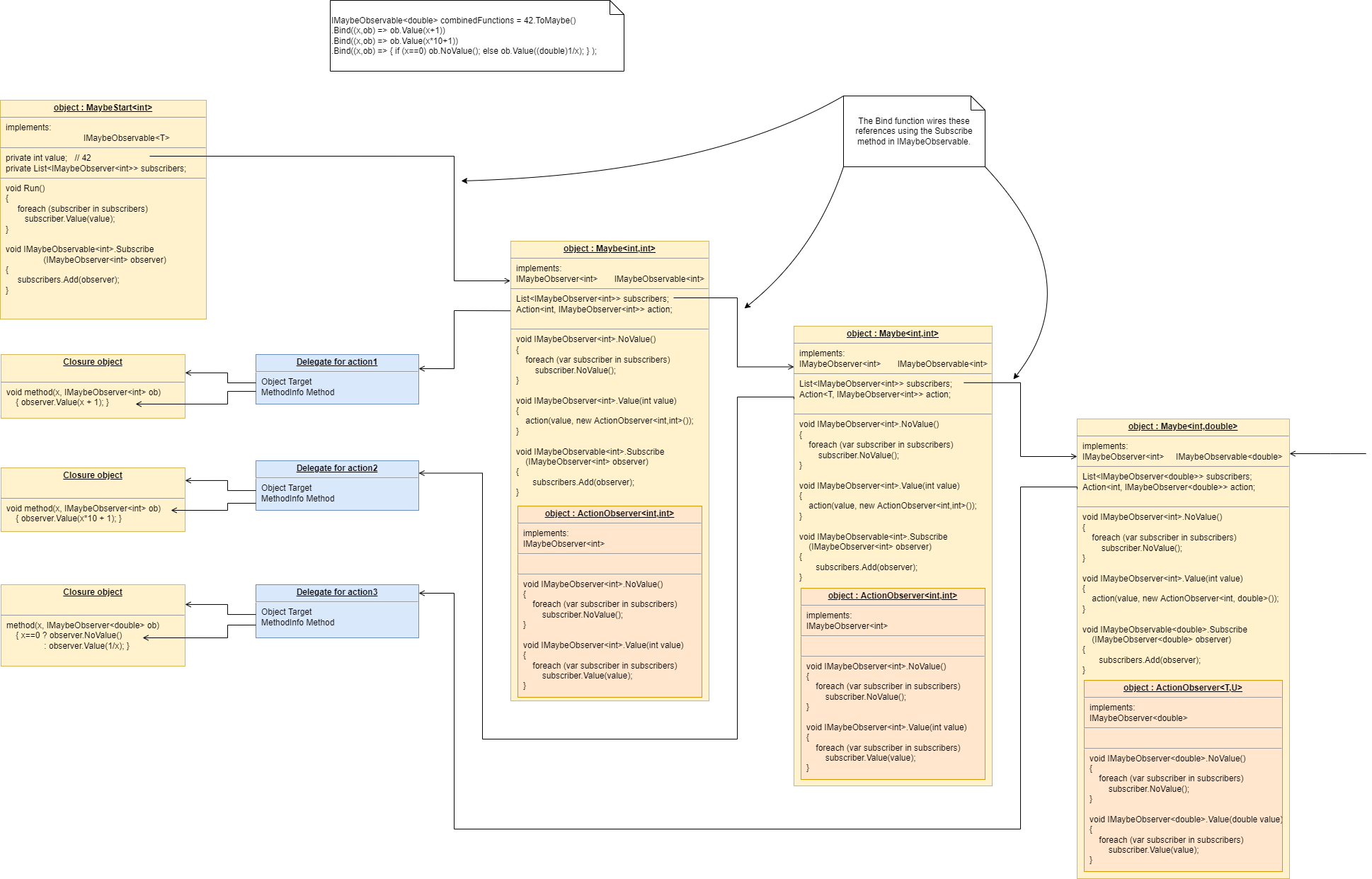

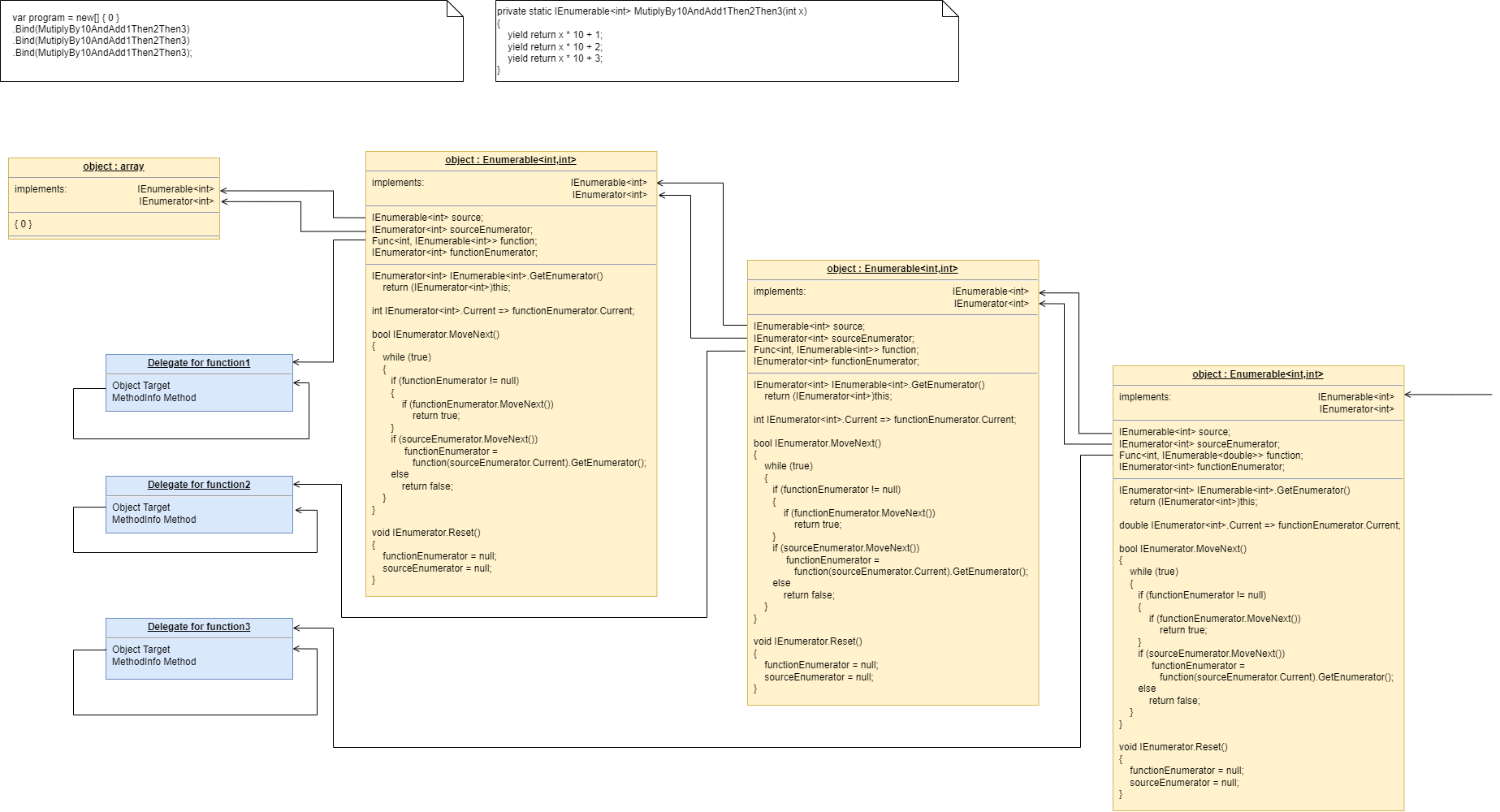

Lastly, here’s how monads actually execute - the execution model. Don’t worry if this doesn’t make sense.

Each function in the program statement (the function after each dot) executes once at the start. They are not executed when the program is running. Each of these functions first instantiates an object (using new), and secondly wires that object to the previous object.

The functions wire the objects together using an abstract interface. Common interfaces used for monads are IEnumerable and IObservable. These interfaces support iteration of data through a companion interface: an IEnumerable returns an IEnumerator, and an IObservable accepts an IObserver. With IEnumerator, a simple method in the interface pulls data from the previous object. With IObserver, a simple method in the interface pushes data to the next object. So IEnumerable/IEnumerator and IObservable/IObserver as abstractions are pretty much just the concept of dataflow, the same abstract concept we will use in the ALA version.
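The pull direction can be sketched in C as well. The following is a rough analogue of the enumerator idea, not the .NET interfaces themselves: Puller, ArrayNext, ScaleNext and PullAll are hypothetical names, and a single `next` function combines "advance" and "read the current value" into one call. Each object is wired once to its upstream neighbour, and data then moves only when the last object in the chain asks for it.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

// Pull-style port: the downstream object asks its upstream for the next value.
typedef struct Puller Puller;
struct Puller {
    bool (*next)(Puller *self, float *out); // returns false when no data is left
    Puller *upstream;                       // wired once, before running
    const int *data;                        // used by the array source
    int length, index;                      // used by the array source
    float offset, scale;                    // used by the scaler
};

// Source: hands out the elements of an array one at a time.
bool ArrayNext(Puller *self, float *out)
{
    if (self->index >= self->length) {
        return false;
    }
    *out = (float)self->data[self->index++];
    return true;
}

// Transformer: pulls one value from upstream, then offsets and scales it.
bool ScaleNext(Puller *self, float *out)
{
    float raw;
    if (!self->upstream->next(self->upstream, &raw)) {
        return false;
    }
    *out = (raw + self->offset) * self->scale;
    return true;
}

// Drains the chain from its last object; returns the sum of the values
// that came out and stores how many there were in *countOut.
float PullAll(Puller *last, int *countOut)
{
    assert(last != NULL && countOut != NULL);

    float sum = 0.0f;
    float value;
    *countOut = 0;
    while (last->next(last, &value)) {
        sum += value;
        (*countOut)++;
    }
    return sum;
}
```

A chain is built by pointing each object's `upstream` at the previous one, for example a ScaleNext object whose upstream is an ArrayNext object, and is then run by calling PullAll on the last object.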

1.6.5. Composing with plain objects

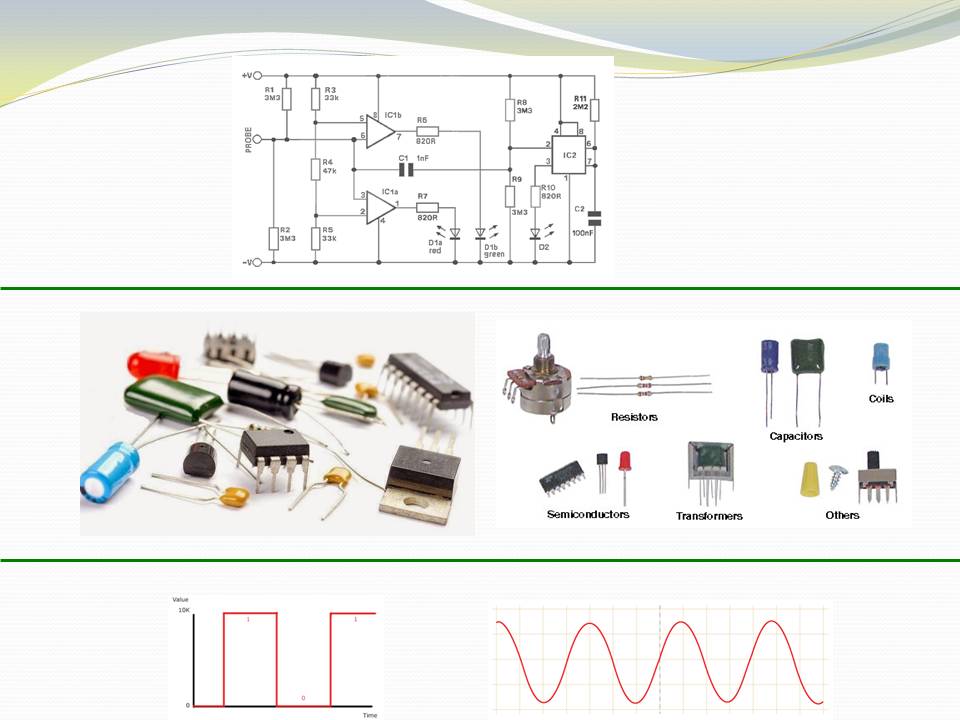

Here is the same program as above, but we are using plain classes with ports instead of monads. We use the 'new' keyword explicitly to create the instances of abstractions, and explicitly wire them together using a wiring function. It’s a little less succinct than the monad version, but the idea of "objects with ports that you wire together like electronic components" is easier to understand, and more versatile. It is necessary for developers to be able to write new domain abstractions, so this needs to be easy.

    void main()
    {
        program = new ADC(channel=2, batchSize=100)
            .WireIn(new Foreach())
            .WireIn(new OffsetAndScale(offset=4, slope=8.3))
            .WireIn(new Filter(strength=10))
            .WireIn(new SampleEvery(15))
            .WireIn(new NumberToString(format="#.#"))
            .WireIn(new Display());
        program.Run();
    }

The WireIn method is doing dependency injection.

The WireIn method returns the new object, so it is possible to string WireIns together. This is called fluent syntax.
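To make the chaining mechanism concrete, here is a minimal sketch in C, where calls are nested rather than joined with dots. The Node struct and ChainLength are hypothetical, and this WireIn is only an assumed, single-port version of the idea. The point it shows is that WireIn injects the downstream object into the upstream object's output port and returns the downstream object, so the result of one call can be the first argument of the next.

```c
#include <assert.h>
#include <stddef.h>

typedef struct Node Node;
struct Node {
    const char *name; // label for this instance
    Node *next;       // output port, filled in by WireIn
};

// Wires `downstream` to the output port of `upstream` and returns `downstream`,
// so that calls can be nested into a chain.
Node *WireIn(Node *upstream, Node *downstream)
{
    assert(upstream != NULL && downstream != NULL);
    upstream->next = downstream;
    return downstream;
}

// Counts the nodes reachable from `head` by following output ports.
int ChainLength(const Node *head)
{
    int n = 0;
    for (const Node *p = head; p != NULL; p = p->next) {
        n++;
    }
    return n;
}
```

With three nodes adc, filter and display, the call `WireIn(WireIn(&adc, &filter), &display)` wires all three in order. Because each call returns the object just wired in, the caller in this sketch keeps its own pointer to the first node in order to start the chain later.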

1.6.6. Using multiple programming paradigms

Monads are generally not versatile enough to handle multiple ports of different programming paradigms, which we will want in ALA programs. Monads usually only support dataflow. But what if we also want to compose the UI, or event-driven behaviour? What if we want to compose transitions between states of a state machine? In ALA, we are able to do all this in the one application, in the same way - using whatever programming paradigms are the best way to express the requirements.

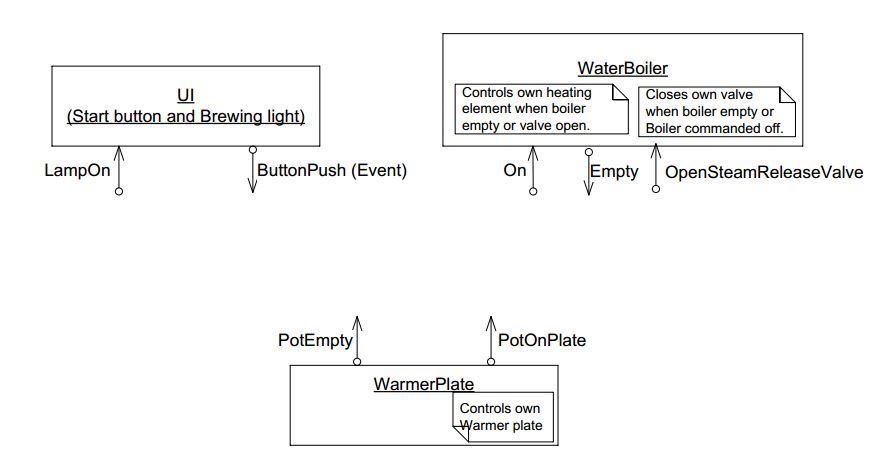

Some instances of abstractions will need to take part in multiple paradigms, such as both UI and dataflow. When we boil down the description of our requirements to pure composition, our composition will often be a graph of relationships. And when you have a graph, your composition is best described by a diagram.

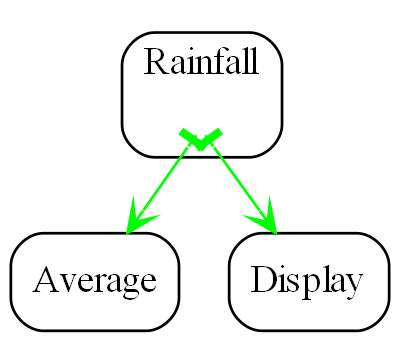

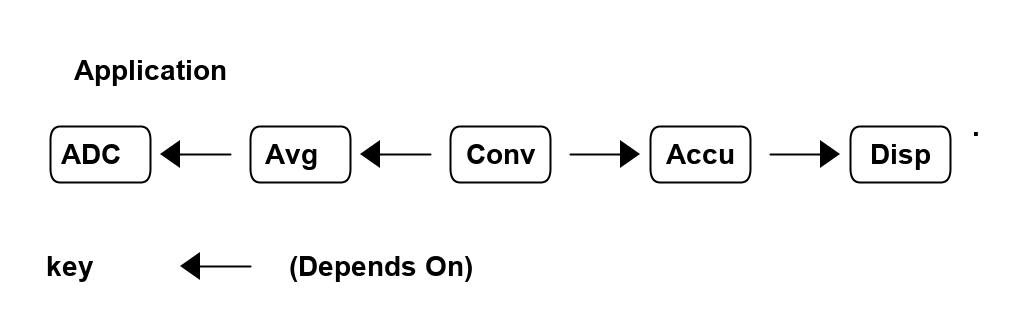

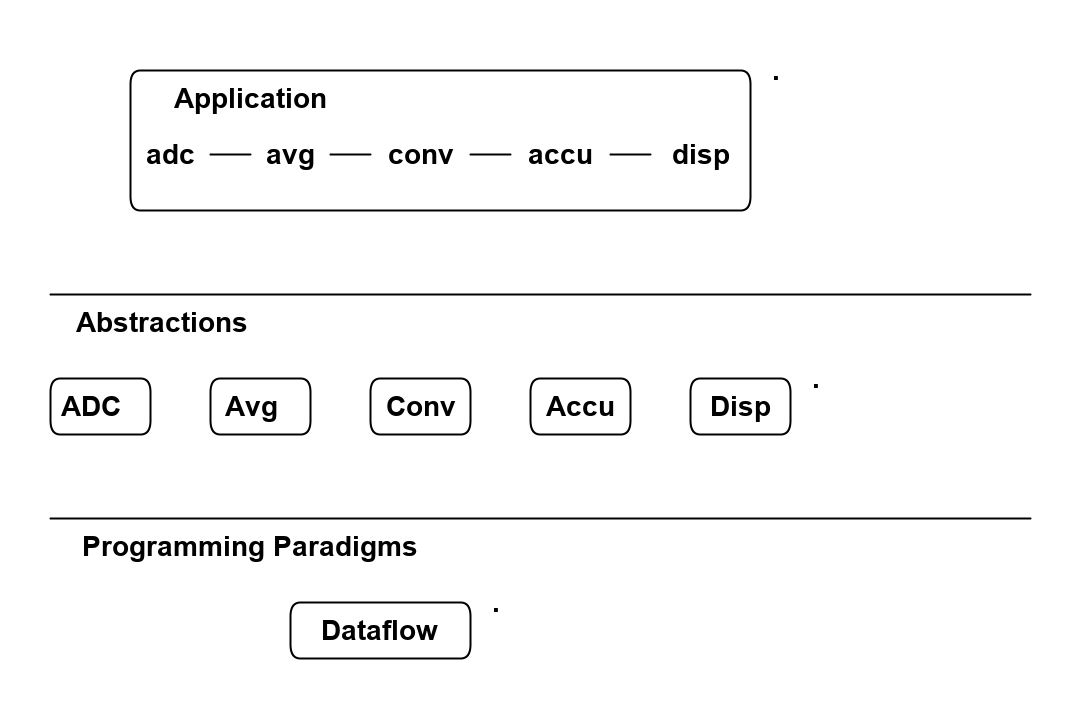

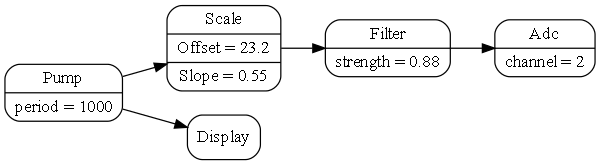

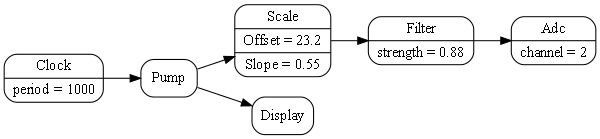

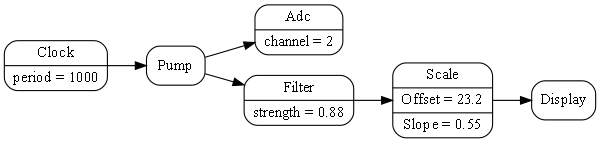

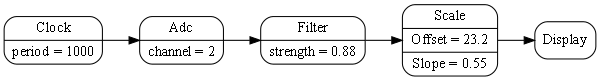

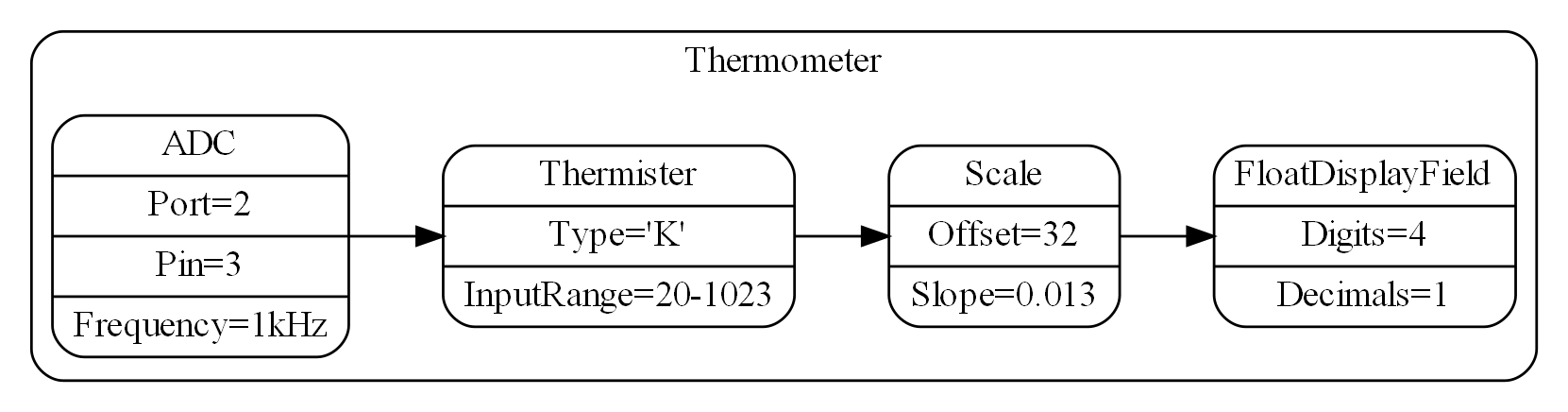

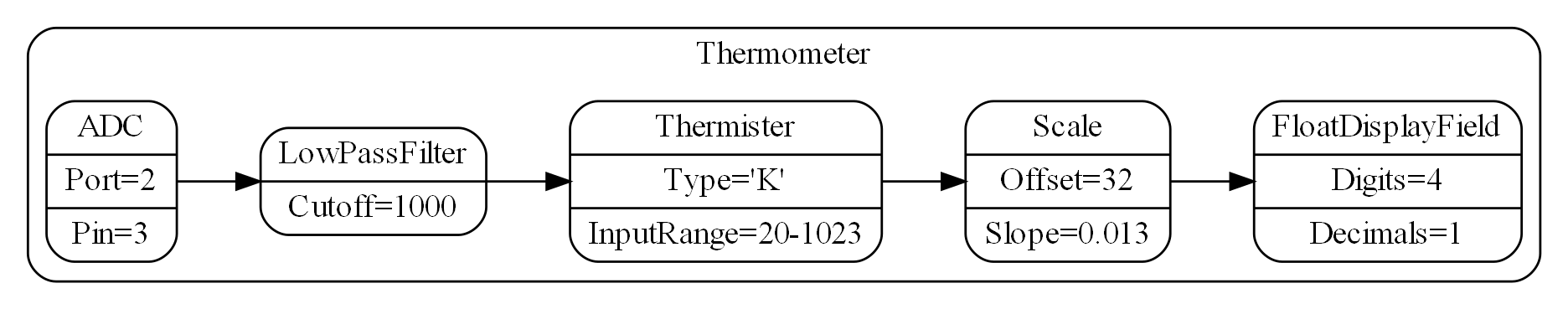

To illustrate this let’s add some UI to our thermometer: